The Stack Overflow 2025 Developer Survey has dropped, and it paints a fascinating picture of where we really stand with AI in software development. Let's dive into the data.

Executive Summary

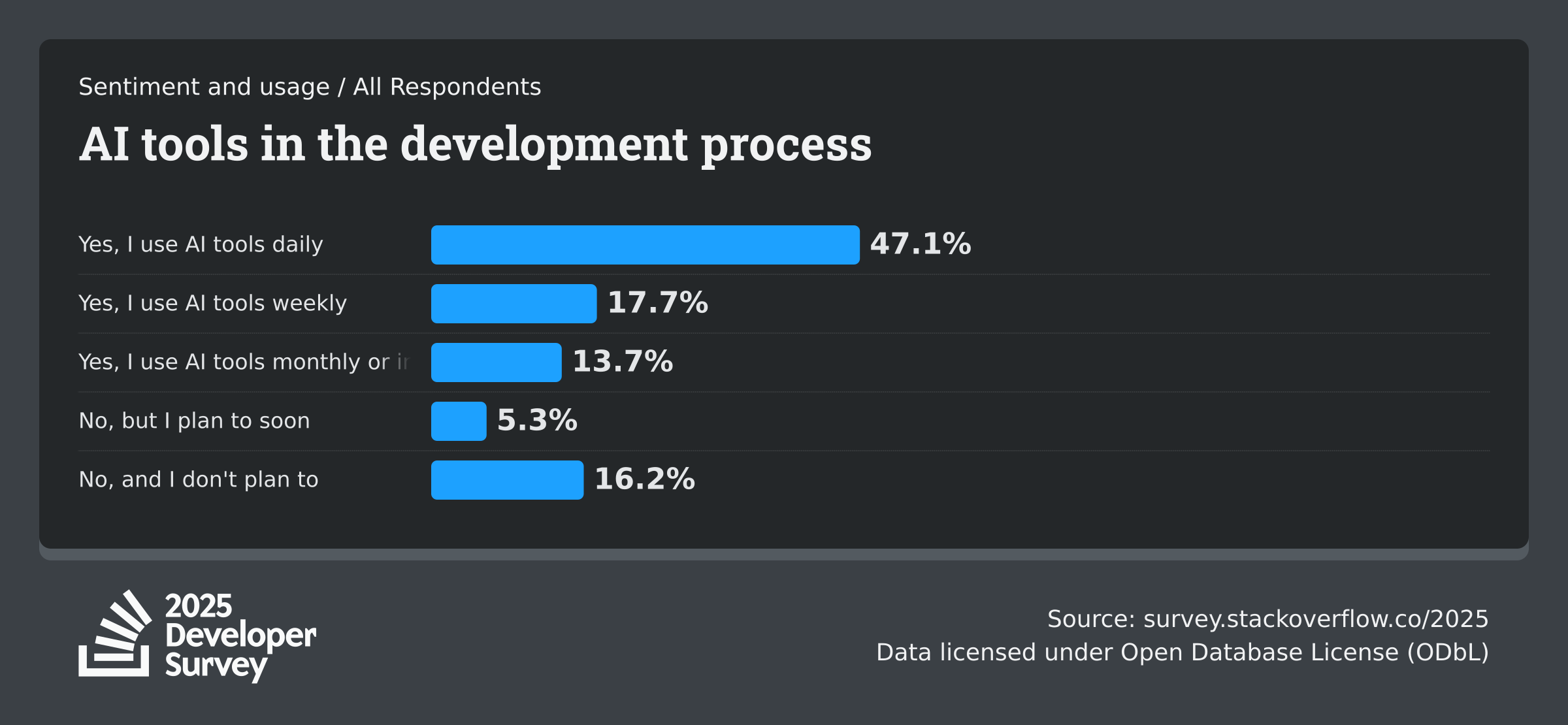

- 84% of developers are using or planning to use AI tools (up from 76% in 2024)

- 51% of professional developers use AI tools daily

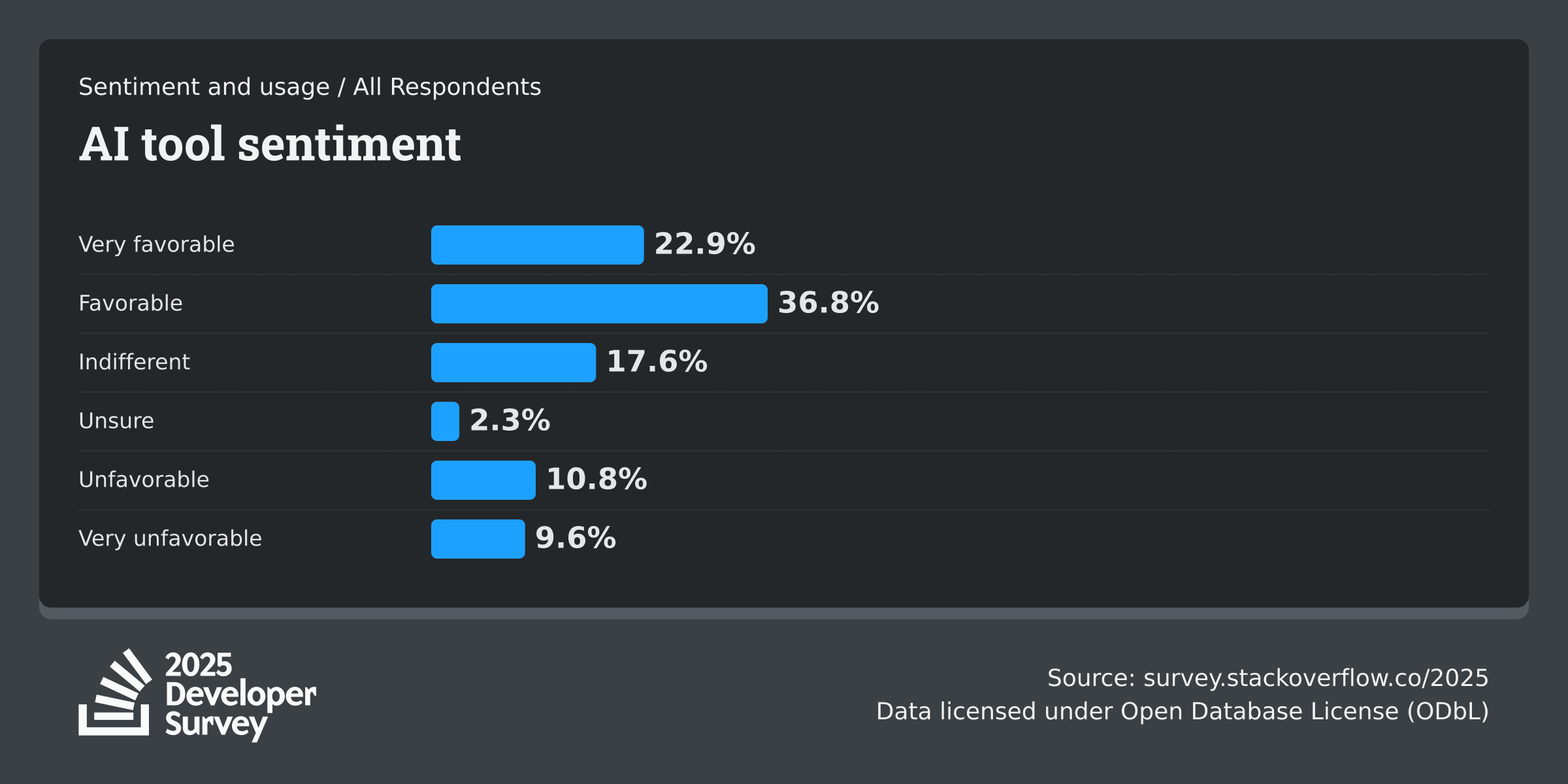

- BUT sentiment is dropping: Only 60% favorable in 2025 (down from 70%+ in 2023-2024)

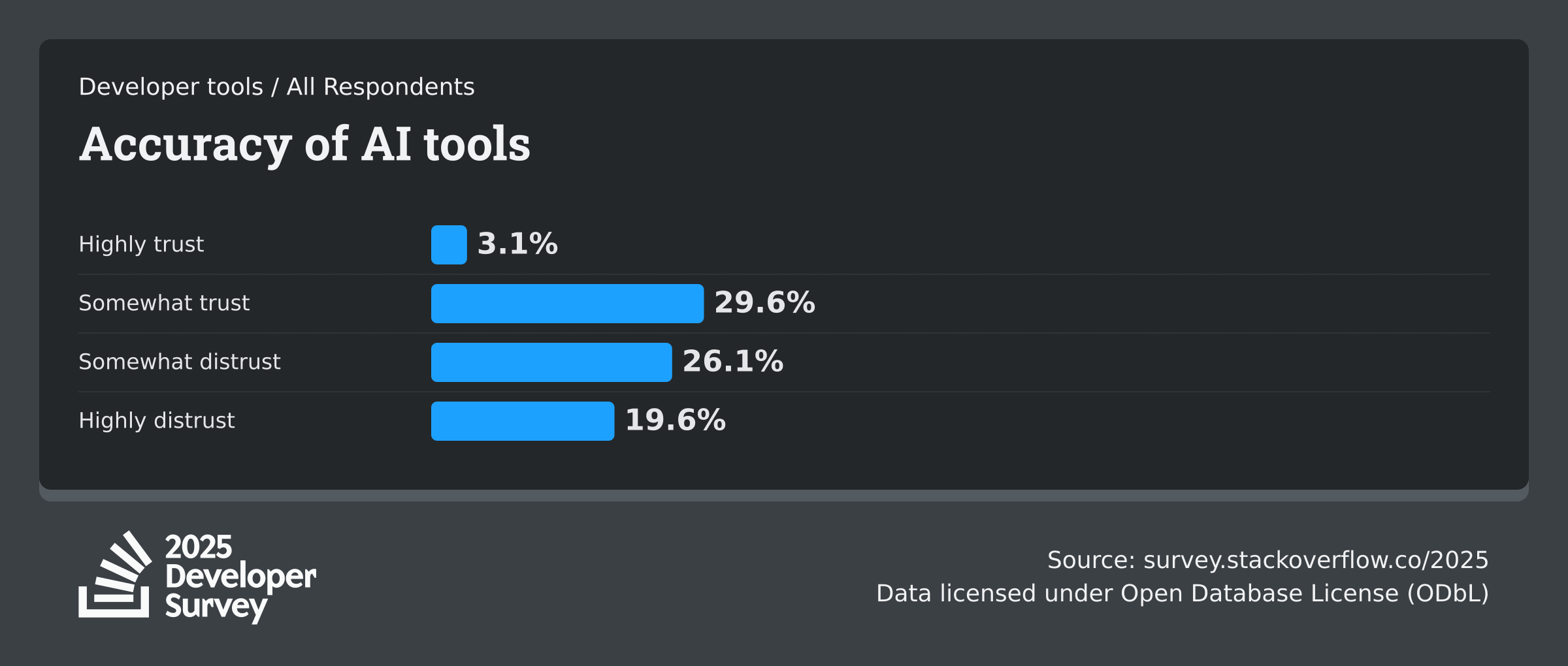

- 46% actively distrust AI accuracy vs. 33% who trust it

- AI agents remain niche: Only 31% use them regularly

AI adoption continues to surge, but the honeymoon phase is clearly over. The hype is doing more harm than good, and people expect so much more from these tools than they can deliver at this moment.

AI Tool Usage: The Numbers Keep Growing

The usage statistics show impressive growth across the board, with daily use approaching half of all respondents.

What jumps out to me here is how early-career developers have gone all-in on AI. Over half use it daily - the highest adoption rate of any group. Meanwhile, developers with 10+ years of experience show the lowest daily usage and the highest resistance.

This split makes perfect sense from where I sit. When you've been around long enough, you've seen silver bullets come and go. You've also developed your own mental models and workflows that took years to build. Adding AI into that requires unlearning habits, and you need to be convinced it's worth it. Junior devs don't have those established patterns yet, so they're building AI into their workflow from day one.

The concerning part? Nearly 20% of those learning to code have no plans to use AI. These folks might be worried about learning fundamentals with a crutch - and honestly, that's a legitimate concern.

The Sentiment Crash: Reality Sets In

Here's where things get interesting. While usage climbs, sentiment is falling off a cliff. The favorable ratings have dropped from 70%+ in 2023-2024 to just 60% in 2025. That's a significant decline in a single year.

From my perspective, using Claude Code daily, this tracks. The initial wow factor wears off quickly when you hit the limitations. The tools are undeniably practical, but they're also frustrating in ways that become more apparent over time. How many times can you tell it not to remove that line of code because it breaks your CI/CD pipeline before you go off the rails yourself? Knowing how to use a tool and get the best out of it is really important, and AI is no different. That "almost right but not quite" phenomenon compounds daily.

What's particularly telling is that professional developers show slightly higher sentiment (61% favorable) than those learning to code (53% favorable). You'd expect the opposite: new learners excited about AI magic, veterans grumpy about change. Instead, it's the learners who are more skeptical. They're probably experiencing the dark side earlier: copying code they don't understand, struggling to debug AI-generated solutions, and wondering if they're actually learning anything. It helps, as always, to know what you are doing, and it shows in the stats.

The Trust Problem: "Show Me the Code."

When developers were asked how much they trust the accuracy of AI tools, the results were sobering. Only about 3% "highly trust" - almost nobody. Around 30% "somewhat trust," while distrust edges out trust overall.

Here's the reality from someone using AI tools every day: you learn not to trust them. Not because they're always wrong, but because you can never be sure when they're right. With Claude Code, I've had sessions where the code is perfect, and sessions where it confidently generates complete nonsense. The problem is that both look the same at first glance.

The experience gap here is fascinating. Senior developers (10+ years) show the lowest trust - only 2.5% highly trust, and over 20% highly distrust. We're the ones who have to fix production issues at 2 AM. We've learned the hard way that "trust but verify" isn't optional in software engineering.

Meanwhile, those learning to code show 6% "highly trust" - still low, but more than double the experienced rate. They haven't been burned enough yet. Give them a few production incidents caused by AI-generated code they didn't fully understand, and those numbers will drop fast.

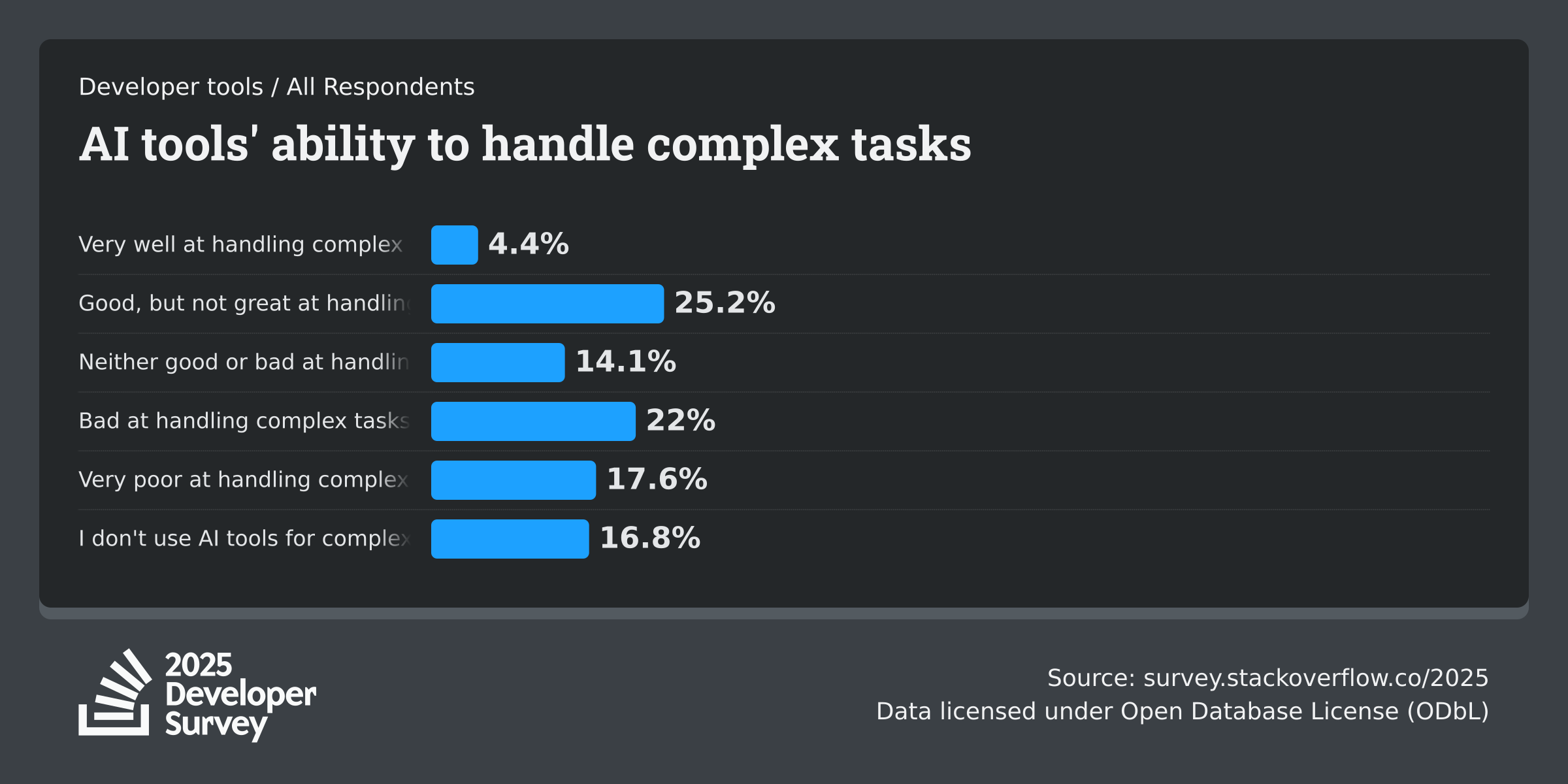

Complex Tasks: AI's Achilles Heel

How well do AI tools handle complex tasks? The data shows that only about 30% think AI does well, while 40% think it does poorly. An additional 17% have given up on using AI for complex work entirely.

This is where the rubber meets the road. Simple tasks like generating boilerplate, writing basic CRUD operations, and explaining syntax - AI handles these brilliantly. But throw a complex architectural decision, a subtle race condition, or a performance optimization problem at it, and the quality drops off a cliff.

In my experience with Claude Code, complex tasks require so much back-and-forth, so much correction and guidance, that I often wonder if I'm actually saving time. You end up playing "AI whisperer," crafting increasingly elaborate prompts to steer it in the right direction. At some point, you realize you could have just written the code yourself in the time you've spent prompting.

The improvement from 2024 (35% said AI struggled) to 2025 (29% among professionals) is notable but still leaves a significant gap. Complex tasks carry too much risk and require too much domain knowledge. You can't just generate-and-hope when a bug could cost thousands in downtime or worse.

Where Developers Use AI (And Where They Won't)

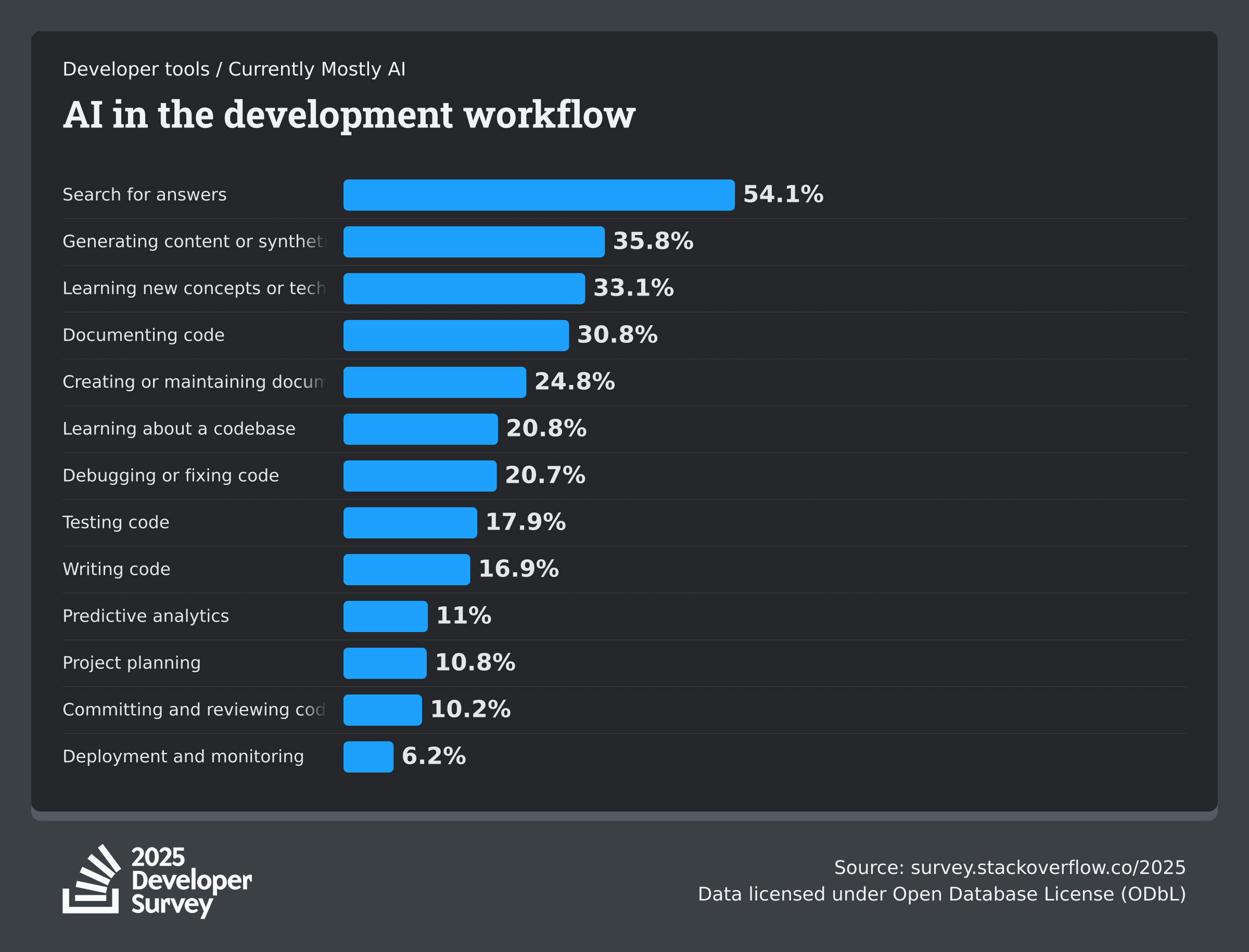

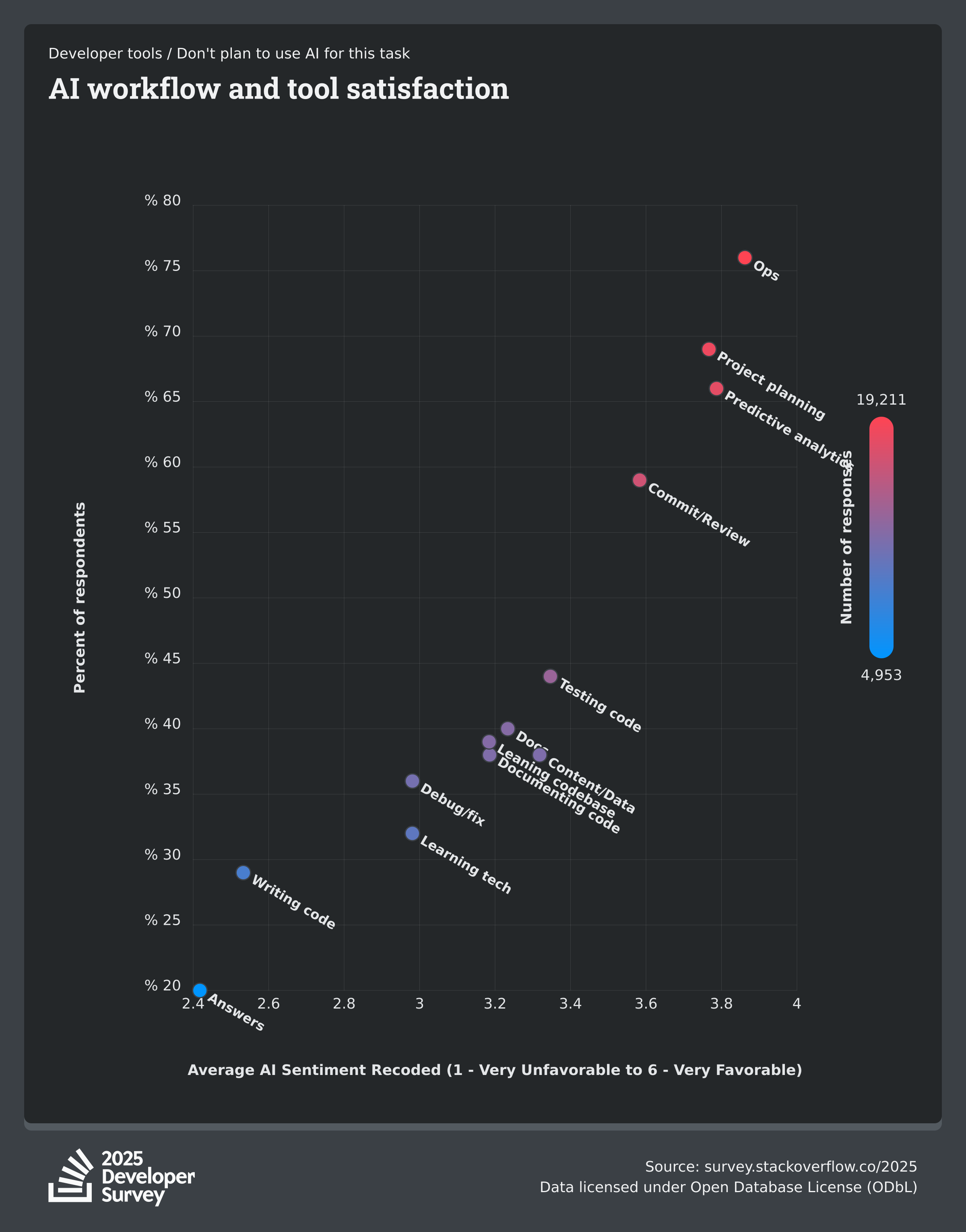

The survey asked developers which parts of their workflow they're integrating AI into. The results show clear patterns in what AI is trusted for and what it's not.

Searching for answers dominates, with over 50% of developers mostly using AI for it - this makes perfect sense. AI excels at aggregating information and explaining concepts, replacing or augmenting Stack Overflow searches. Generating content, learning new ideas, and documentation follow in the 25-35% range. These are all lower-stakes tasks where an "almost right" answer can be quickly corrected.

Tasks Where Devs Draw the Line

The flip side is more revealing. Three-quarters of developers don't plan to use AI for deployment and monitoring. Nearly 70% won't use it for project planning. Code review and predictive analytics are similarly off-limits for the majority.

As someone working in healthcare software, this resonates deeply. Deployment and monitoring? That's where your product lives or dies. A bad deployment can bring down production. A monitoring system that cries wolf (or worse, stays silent during an outage) is worse than no monitoring at all. These aren't tasks where "almost right" is acceptable.

Project planning requires understanding business context, technical constraints, team capabilities, and political realities that AI can't grasp. And code review isn't just about syntax - it's about architectural decisions, maintainability, security implications, and team standards.

The pattern is clear: Developers use AI where it can accelerate work without significant consequences. But for anything that touches production, requires deep understanding, or carries real risk? They're keeping AI at arm's length.

The Frustration Factor

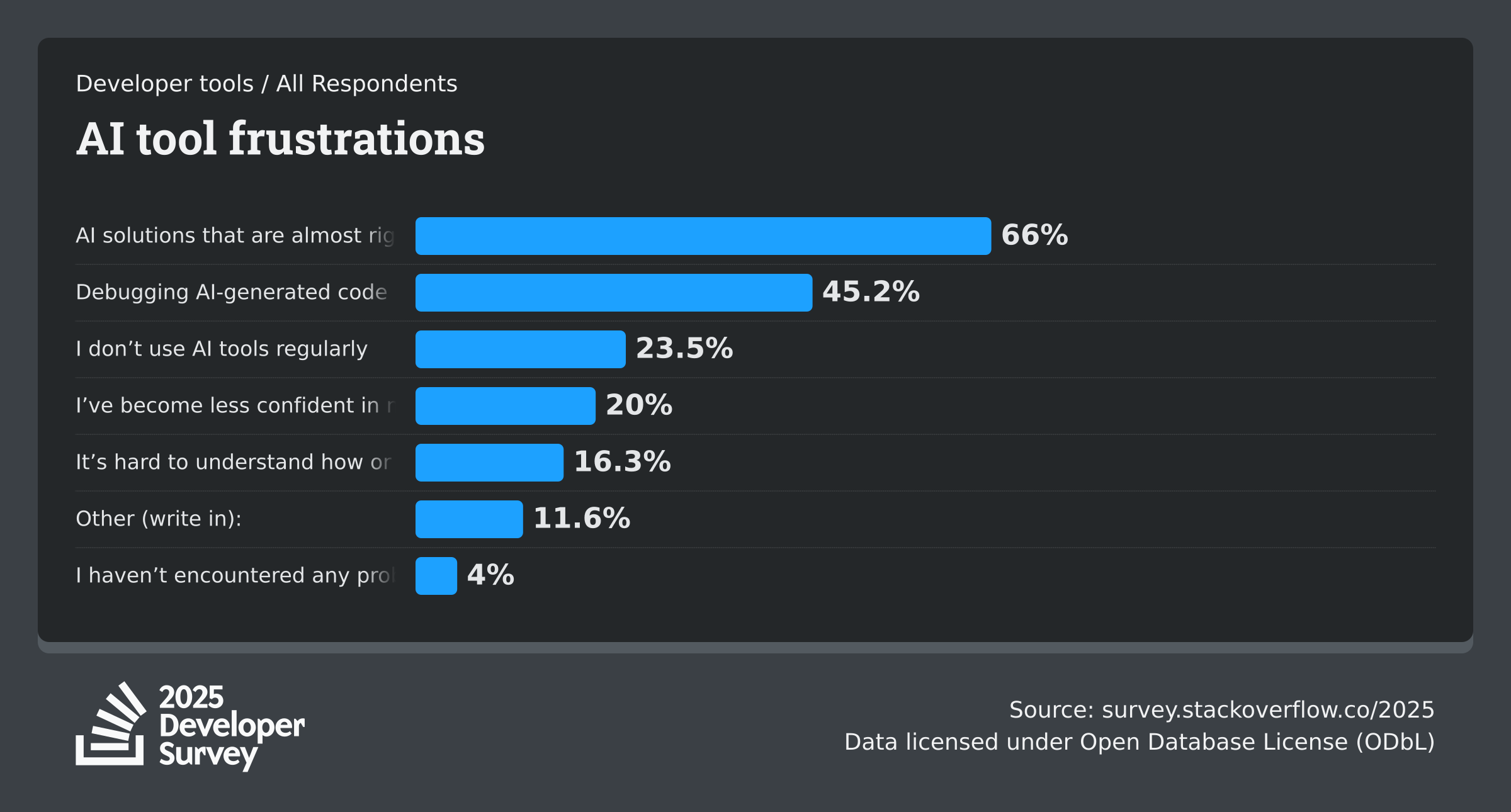

What drives developers crazy about AI tools? The graph tells a clear story.

The biggest frustration - cited by two-thirds of developers - is dealing with AI solutions that are "almost right, but not quite." This is the killer. It's actually worse than getting completely wrong answers, because you invest time reviewing, testing, and debugging before you realize the subtle bugs. With Claude Code, I've lost count of how many times I've looked at generated code and thought "this looks good," only to find edge cases it missed or incorrect assumptions it made.
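To make the "almost right, but not quite" pattern concrete, here is a minimal, made-up sketch. It is not taken from the survey or from any real AI session; the function names `median_almost_right` and `median_fixed` are hypothetical. The first version reads fine at a glance and passes an odd-length test, but silently returns the wrong value for even-length input.

```python
def median_almost_right(values):
    """Plausible-looking median: correct for odd lengths, wrong for even ones."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def median_fixed(values):
    """Median that averages the two middle values when the length is even."""
    if not values:
        raise ValueError("median of an empty sequence is undefined")
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


odd, even = [5, 1, 3], [4, 1, 3, 2]
# Both versions agree on odd-length input, so a quick check looks green.
print(median_almost_right(odd), median_fixed(odd))
# They disagree on even-length input, which is the edge case that slips through.
print(median_almost_right(even), median_fixed(even))
```

The point of the sketch is that nothing fails loudly: the bug only shows up if the reviewer thinks to test the even-length case.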

The second major frustration compounds the first: debugging AI-generated code takes longer than debugging your own code. This makes sense - you didn't write it, you might not fully understand the approach it took, and you're second-guessing every line, wondering what other subtle issues might be lurking.

But here's the stat that really concerns me: 20% report becoming less confident in their own problem-solving abilities. This is the dark side of AI assistance. Are we building muscle memory for prompting instead of coding? When you reach for AI for every problem, you stop developing the mental models that make you a strong engineer. For senior devs, we already have those models. But what about the juniors who are building their foundation on AI-generated code they don't fully understand?

Why Humans Still Matter

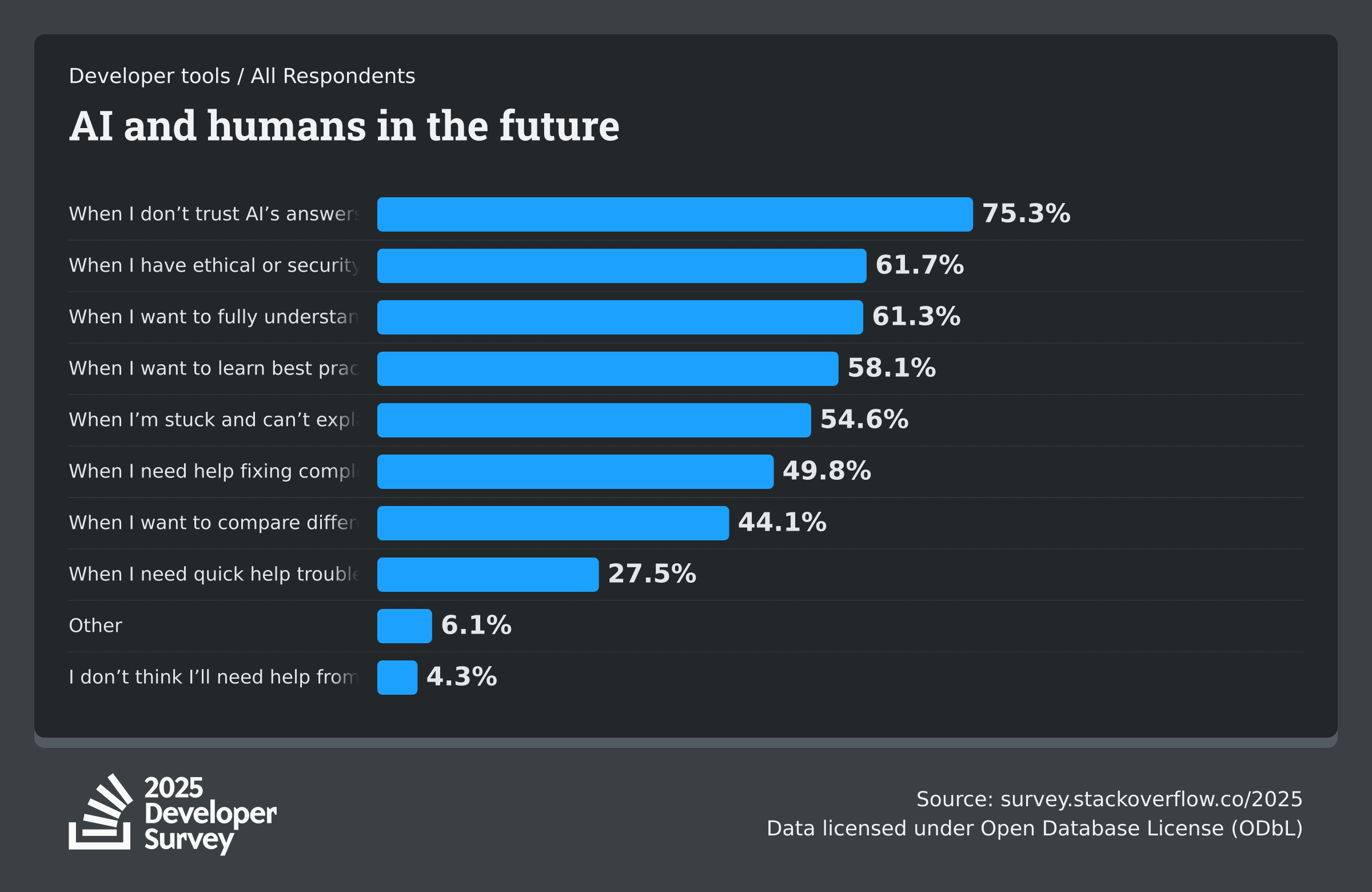

Developers were asked: In a future where AI can do most coding tasks, when would you still want help from another person?

The top answer says everything: 75% would seek human help when they don't trust the AI. Even in a hypothetical future with far more capable AI, three-quarters of developers fundamentally don't trust it as the final authority. The other top responses cluster around similar themes - ethical concerns (62%), wanting to fully understand something (61%), and learning best practices (58%).

What this tells me is that developers see themselves as architects and verifiers, not as being replaced by AI. The human role becomes more critical, not less - we're the quality gates, the ones who understand the "why" behind the code, the ones who consider the second and third-order effects that AI misses.

This is exactly right from my experience. I use Claude Code to accelerate specific tasks, but I never unquestioningly accept its output. Every suggestion gets scrutinized through the lens of: Does this handle edge cases? Is this maintainable? What are the security implications? What happens under load? These are questions AI still struggles with, and they're the questions that separate good code from production-ready code.

The future isn't AI replacing developers. That's the narrative pushed by all the big players in this space. The future is developers leveraging AI as a powerful tool while maintaining human judgment for what actually matters.

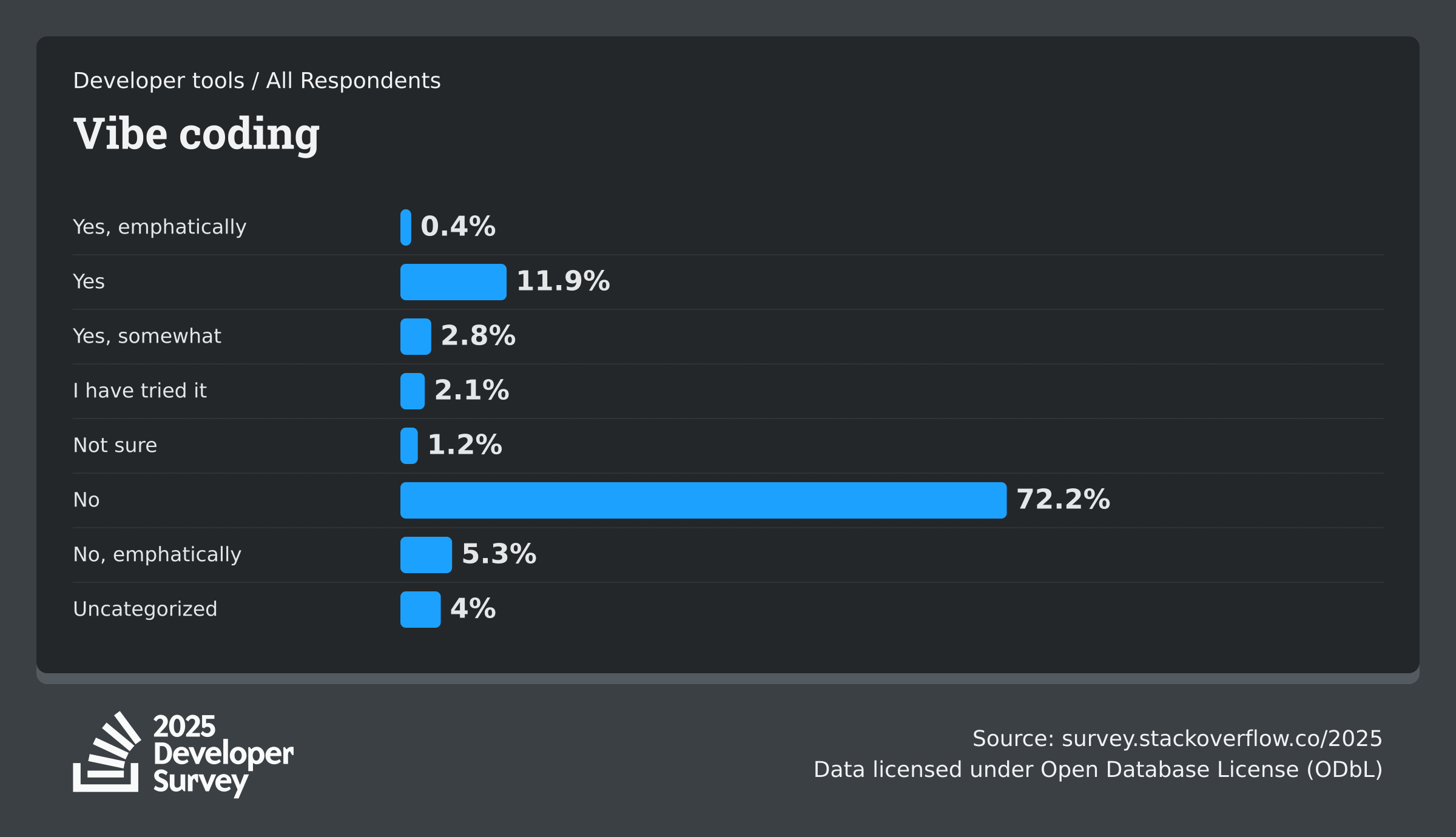

Vibe Coding: Not a Thing

"Vibe coding" - the practice of generating software primarily from LLM prompts - was explicitly asked about. The graph shows it's not happening.

About 15% of developers say they practice some form of vibe coding, while over 70% say no, with another 5% saying "no, emphatically." Even among younger developers (18-24), the numbers barely budge - still only around 15% yes.

This makes complete sense from my perspective. Generating code from prompts is fine for experiments, prototypes, or simple scripts. But professional software development involves so much more than just generating code. You need to understand the codebase, architecture, business requirements, performance characteristics, and security implications. You should think about maintainability six months from now, when someone else will have to modify your code.

Prompt engineering might be a helpful skill, but it's not a replacement for understanding software engineering fundamentals. Most developers see themselves as coders who use AI tools, not as prompt engineers who generate code.

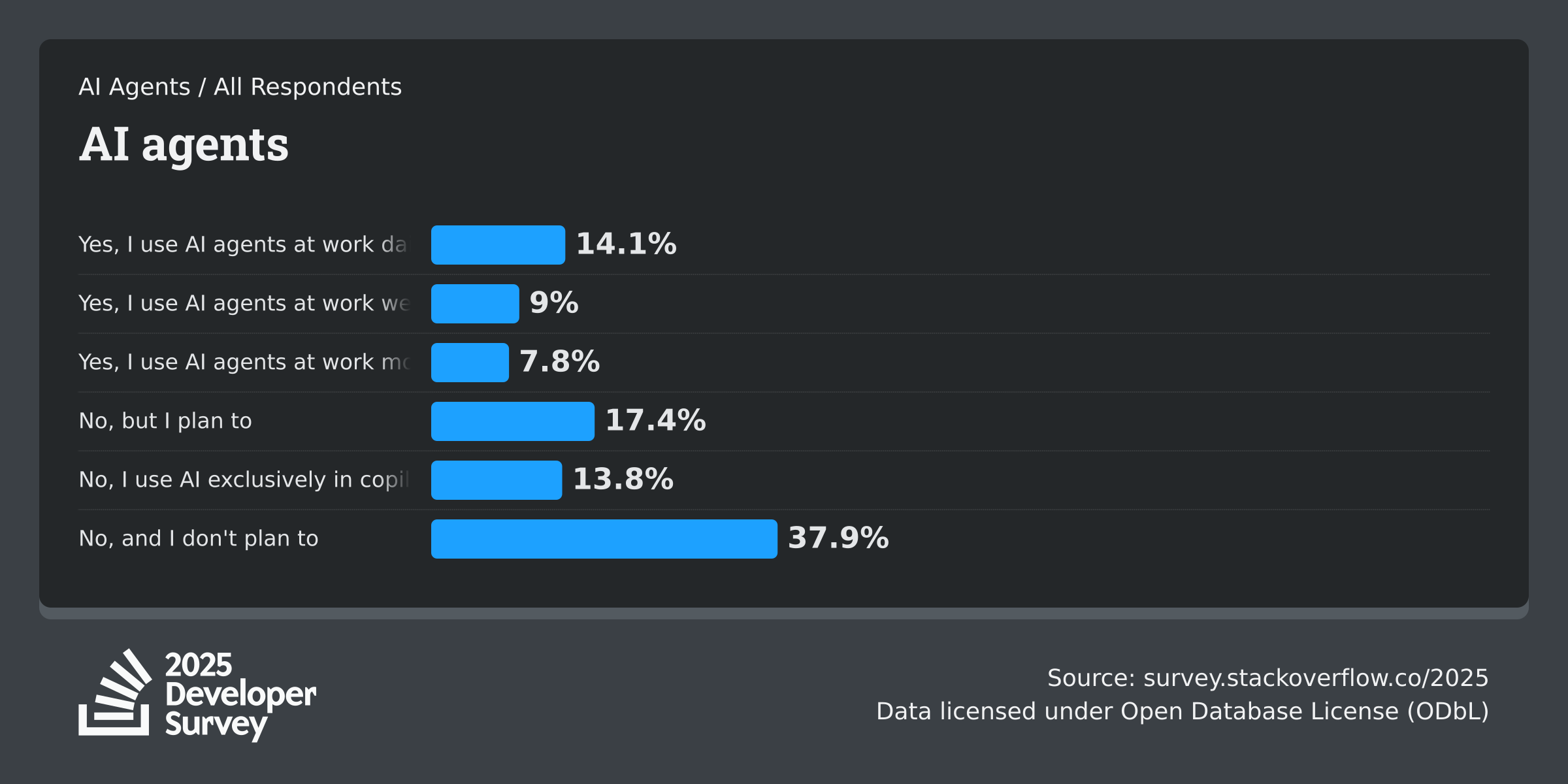

AI Agents: Still Niche, But Growing

AI agents - autonomous software entities that operate with minimal human intervention - are more advanced than simple AI assistants. How widely are they being used?

Looking at the data, about 31% are currently using agents to some degree, while 38% have no plans to adopt them. I fall into that second camp. Even though I'm a heavy user of Claude Code, I haven't jumped into agents yet.

Why? The trust issue is even more acute with agents. With AI assistants like Claude Code, I'm reviewing every suggestion, testing every piece of generated code, and maintaining control over what actually gets committed. Agents, by definition, operate with minimal human intervention - they make decisions and take actions autonomously. That's a much bigger leap.
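The difference between the two modes can be sketched in a few lines. This is an illustrative toy under my own assumptions, not how Claude Code or any agent framework actually works; `ProposedChange`, `apply_with_review`, `apply_autonomously`, and `cautious_reviewer` are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProposedChange:
    """A hypothetical unit of work suggested by an AI tool."""
    description: str
    touches_production: bool = False


def apply_with_review(changes: List[ProposedChange],
                      approve: Callable[[ProposedChange], bool]) -> List[str]:
    """Assistant-style flow: nothing is applied unless the reviewer approves it."""
    return [change.description for change in changes if approve(change)]


def apply_autonomously(changes: List[ProposedChange]) -> List[str]:
    """Agent-style flow: every proposed change is applied without a human gate."""
    return [change.description for change in changes]


def cautious_reviewer(change: ProposedChange) -> bool:
    """Hypothetical reviewer policy: reject anything that touches production."""
    return not change.touches_production


proposals = [
    ProposedChange("add unit test for date parser"),
    ProposedChange("drop unused column in live database", touches_production=True),
]

print(apply_with_review(proposals, cautious_reviewer))  # only the reviewed, safe change
print(apply_autonomously(proposals))                    # both changes, no gate
```

In the reviewed flow the human is a hard gate on every change; in the autonomous flow the only safeguard is whatever the agent decides for itself.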

The 17% who "plan to use" agents is interesting - it suggests curiosity but not urgency. And the 14% who use agents only in copilot/autocomplete mode show that many are dipping their toes in without going fully autonomous.

As someone working on production healthcare systems at ICANotes, where bugs have real consequences, I need to see agents mature significantly before I'd trust them with autonomous operations. The copilot model works because I'm still the one making decisions. Agents flip that relationship, and I'm not convinced the technology is there yet.

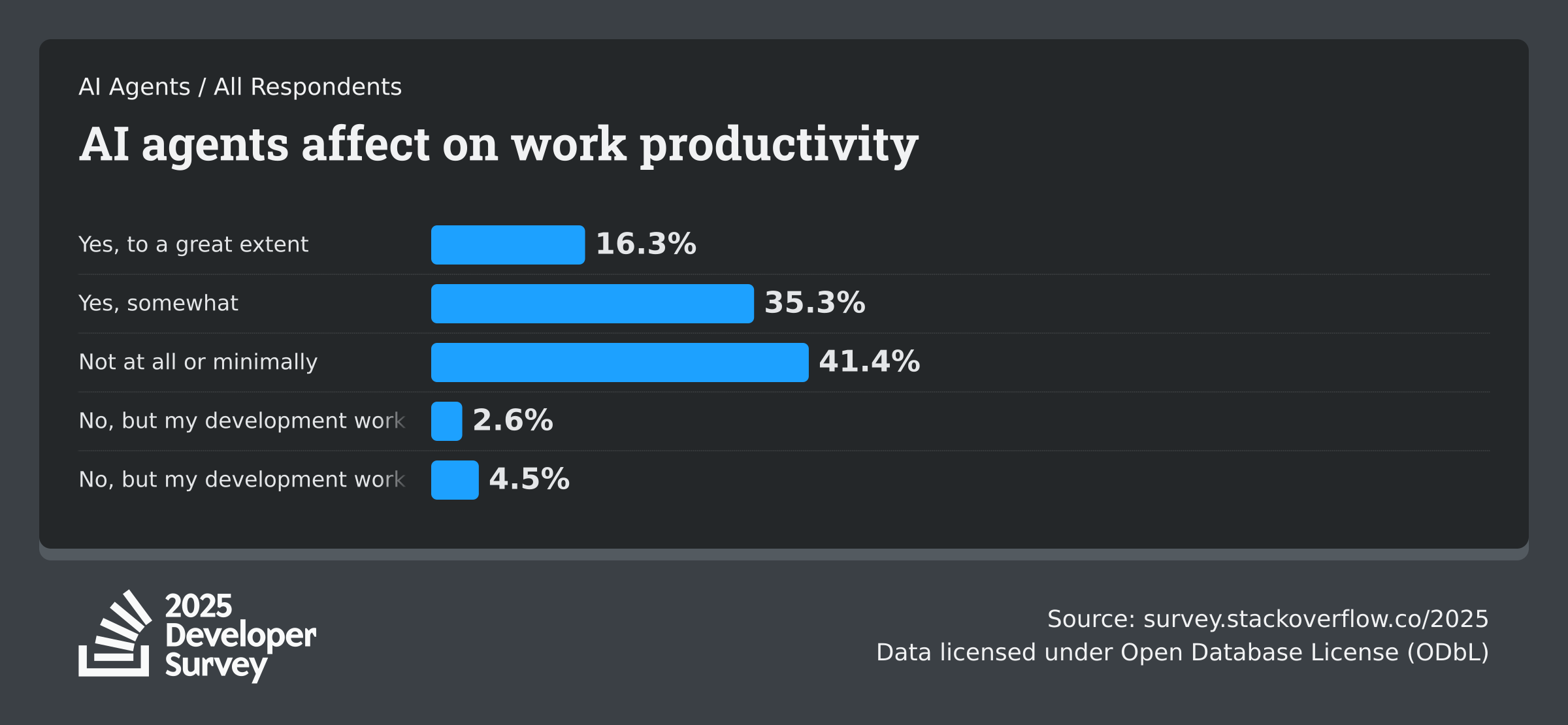

AI Agents: Productivity Impact

Have AI tools or agents changed how developers work?

The graph shows just over half report positive productivity impact to some degree, with about 16% saying "to a great extent" and 35% saying "somewhat." But what stands out to me is the 41% who say AI has had minimal or no impact on their work.

This 41% is the reality check. For many developers, AI tools are nice-to-haves, not game-changers. And honestly, that tracks with my experience. Some days, Claude Code saves me significant time - generating boilerplate, explaining unfamiliar APIs, writing test cases. Other days, I spend more time correcting AI suggestions than I would have just writing the code myself.

The "to a great extent" number at 16% is telling. These are the developers who've figured out the sweet spot - specific use cases where AI genuinely shines, combined with workflows that minimize the frustrations. Or they're working on tasks that AI handles well (lots of CRUD operations, standard patterns, well-trodden paths). It helps a lot to use widely adopted technologies, like likee.js or Java. It makes a world of difference whether you are working in a small niche or just making websites with Next.js and React.

But 41% seeing minimal impact suggests that for complex, domain-specific, or novel work - which is what many senior developers spend most of their time on - AI hasn't fundamentally changed the game yet.

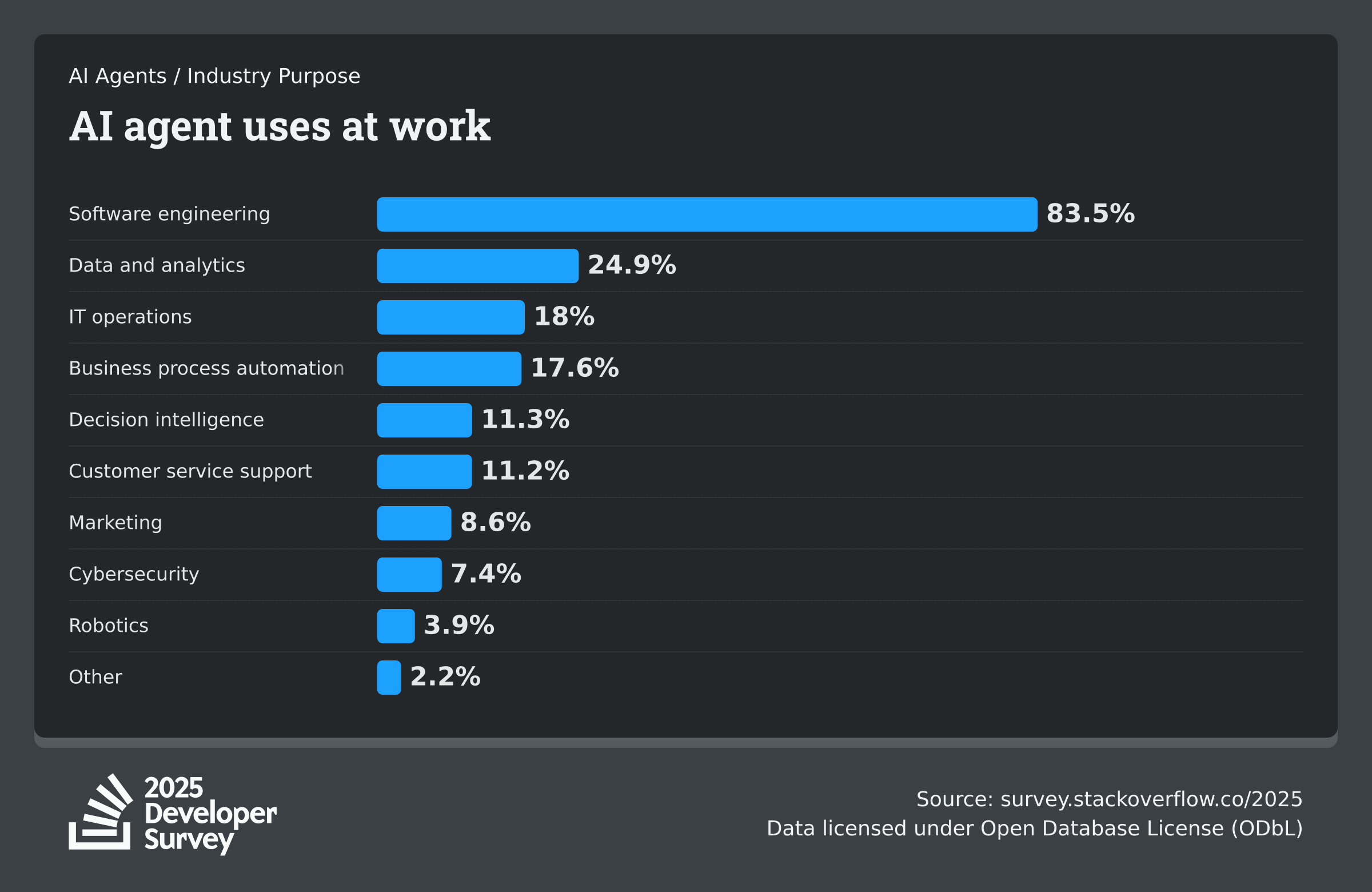

What Developers Use Agents For

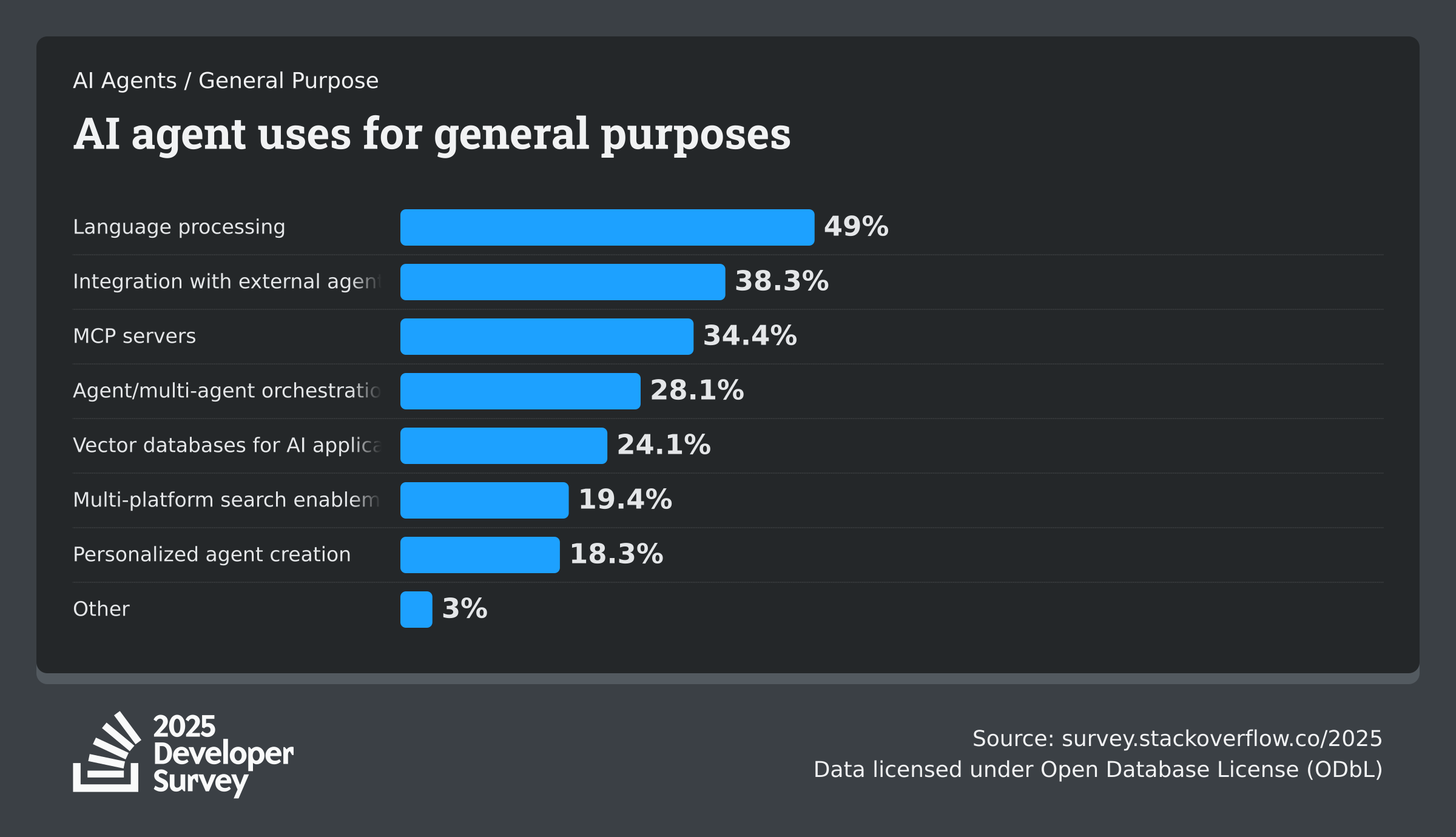

The survey asked agent users what they're doing with them:

Industry Purposes

Software engineering absolutely dominates, which makes sense given the developer-heavy survey audience. The gap between software engineering and other use cases is massive; developers are primarily using agents for what they know best.

General Purposes

Language processing leads among general-purpose applications, followed by integration with external agents and APIs. MCP servers and agent orchestration are also seeing significant use. For now, language processing looks like the killer app for agents.

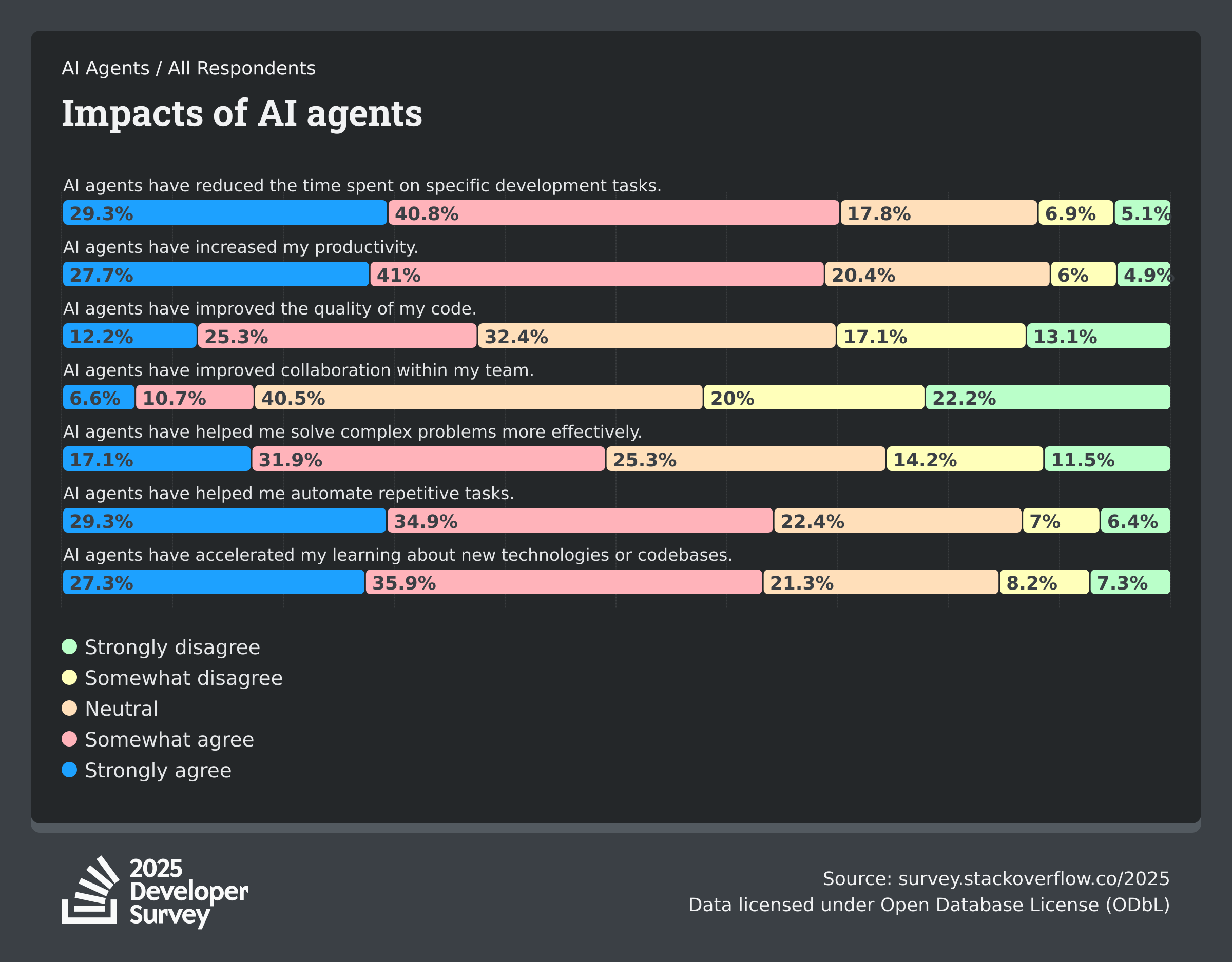

Agent Impact: Personal Wins, Team Struggles

When asked about the impact of AI agents, developers reported strong agreement on personal productivity benefits. Around 70% agree that agents have reduced time on specific tasks and increased overall productivity. Similar majorities - in the 60% range - report accelerated learning and help with automating repetitive tasks. However, the benefits drop off sharply for code quality improvement (under 40% agreement) and solving complex problems (just under 50%).

The pattern is striking: agents excel at personal productivity gains but struggle with team-level impact. Improved team collaboration scored by far the lowest, with fewer than one in five developers agreeing that agents have helped in this area. This makes sense. Agents are tools that individual developers use, but effective team collaboration requires shared context, communication, and alignment, areas where AI still struggles.

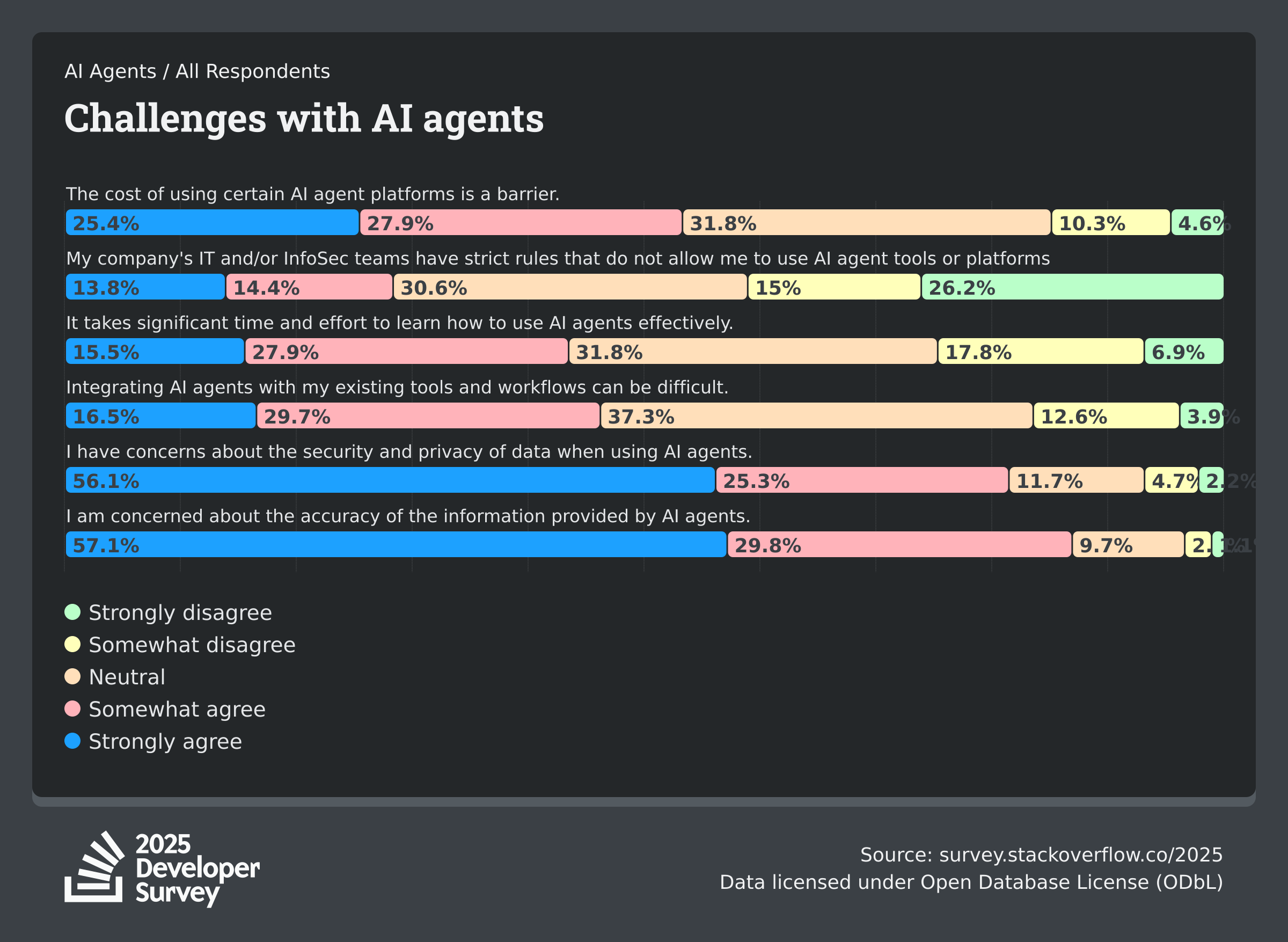

Agent Challenges: Trust Is Everything

What concerns do developers have about AI agents?

The concerns are substantial and widespread. Nearly 90% express concern about accuracy, while over 80% worry about security and privacy. These top two concerns dwarf everything else; they're fundamental questions about whether agents can be trusted in professional environments. The level of confidence required to give an agent permission to perform operations on a production database is staggering. Secondary concerns include cost barriers (just over half cite this), integration difficulties (approaching half), and the learning curve required (just under half). Interestingly, company restrictions rank lower, with less than a third reporting this as a barrier.

The Agent Tech Stack

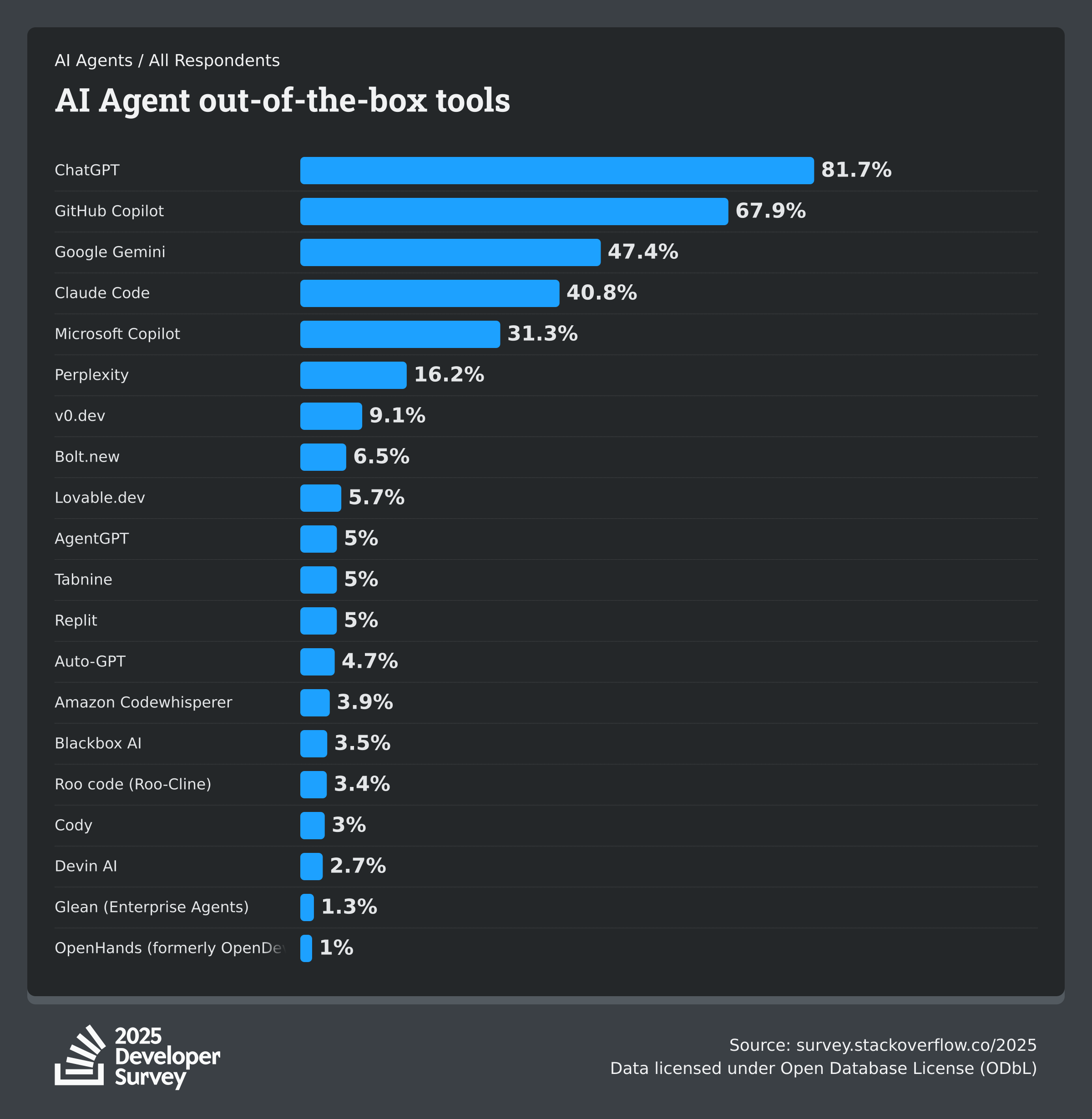

For developers building or using agents, specific tools have emerged as favorites:

Out-of-the-Box Tools

The market leaders are clear: ChatGPT dominates with over 80% adoption, followed closely by GitHub Copilot at over 70%. Google Gemini has captured nearly half the market, while Claude Code has achieved impressive adoption in less than a year. These tools represent the primary entry points for most developers using AI assistance.

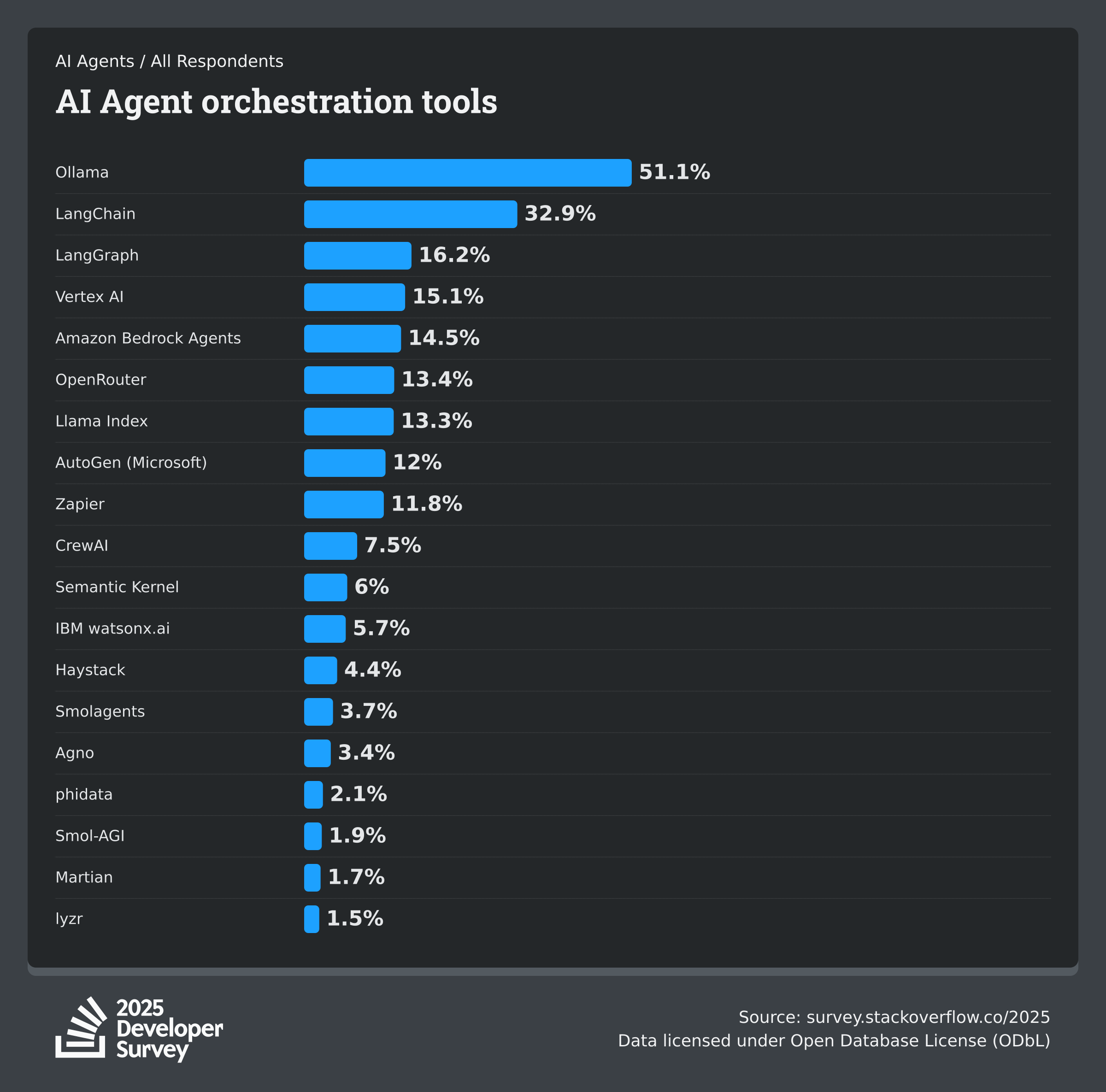

Agent Orchestration

Open-source tools dominate the orchestration landscape. Ollama leads significantly, positioning itself as the go-to framework for agent development, with LangChain as the second major player. The dominance of open source here is striking; developers building agents clearly prefer tools they can inspect, modify, and self-host over proprietary cloud solutions.

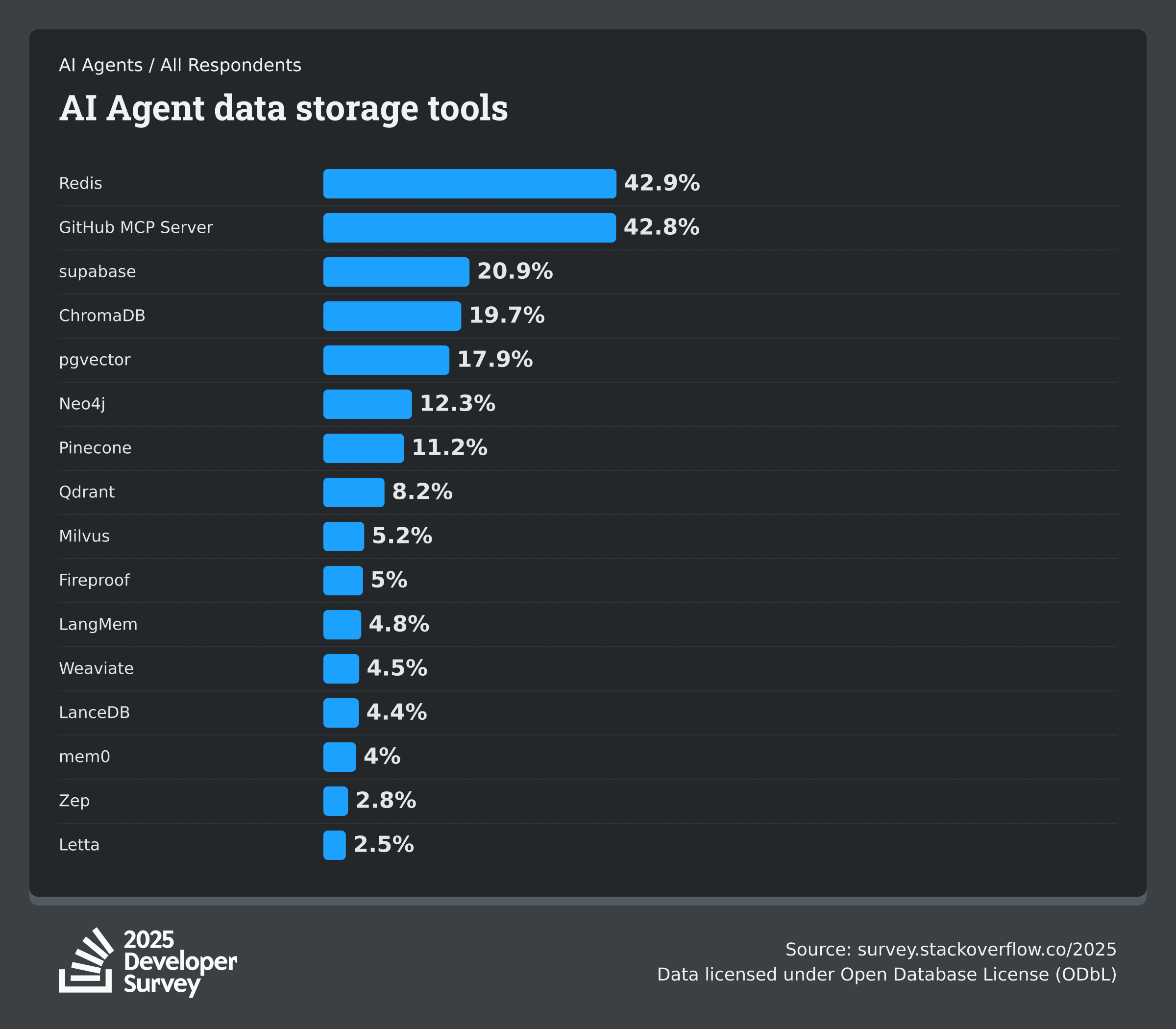

Data Management

When it comes to data storage for AI agents, Redis and GitHub MCP Server are nearly tied for the top spot. Traditional, battle-tested tools are being repurposed for AI workloads, while newer vector-specific databases like ChromaDB and pgvector are gaining traction but haven't yet overtaken the established players. I plan to use pgvector in a future project, so I will write more about it then.

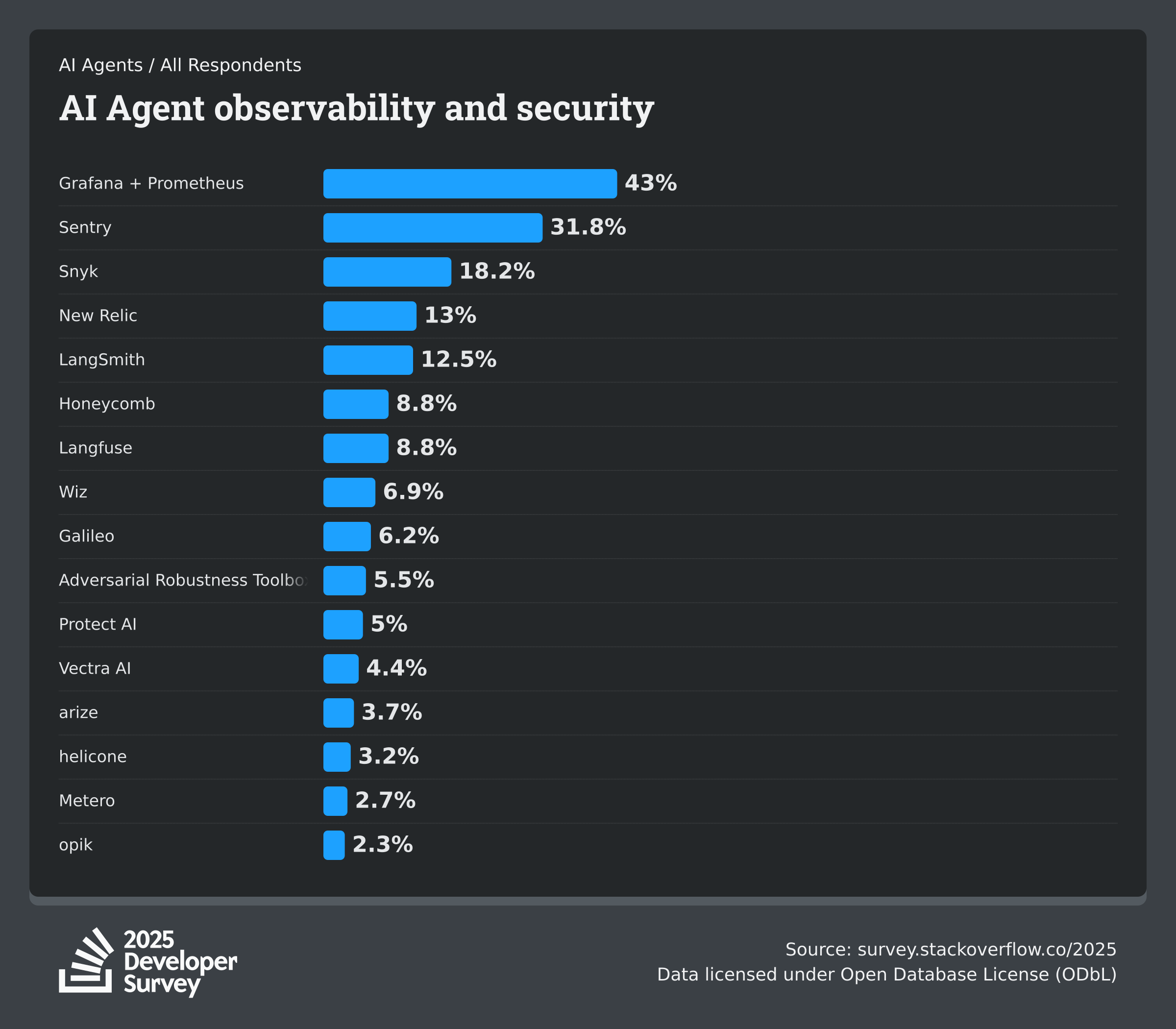

Observability

Rather than adopting new AI-native monitoring solutions, developers are adapting their existing DevOps toolkit. The Grafana and Prometheus combination leads the pack, showing that traditional infrastructure monitoring translates well to AI workloads. Sentry and Snyk follow, bringing their application monitoring and security scanning expertise to the AI space. This pattern suggests developers trust proven tools over newer, AI-specific alternatives.

Key Takeaways: What This Means for Developers

- The gap between usage and sentiment: 84% using or planning to use AI, but only 60% view it favorably. Developers are using AI because they feel they have to, not because they love it.

- The trust crisis: 46% actively distrust AI accuracy and 87% are concerned about agent accuracy. This won't be solved by making models bigger - it requires better tooling, validation, and transparency.

- Clear boundaries: Developers happily use AI for documentation, learning, and searching for answers. But for high-responsibility tasks like deployment, monitoring, and architecture decisions, they're keeping AI out.

- The "almost right" problem: The #1 frustration is AI solutions that are almost correct but not quite. This is harder to solve than achieving 90% accuracy - it's about that last 10% being different every time. The same prompt produces different outputs, breaking our traditional understanding of programming as an exact science.

- Humans as gatekeepers: 75% would still seek human help when they don't trust AI. Developers see themselves as architects and verifiers, not being replaced by AI, but using it as a powerful (if sometimes frustrating) tool.

- Agents are still early: Only 31% use agents regularly, and concerns about accuracy and security are massive. The technology shows promise but needs to mature significantly before reaching mainstream adoption.

- Experience creates skepticism: Early career developers (1-5 years) are the most enthusiastic adopters, while experienced developers (10+ years) remain cautious. This creates an interesting dynamic where the most AI-dependent developers may also be the least equipped to validate AI output.

My Take

As someone who's been in the trenches with both traditional development and AI tools, this survey data rings true. AI coding assistants are genuinely helpful, but they're also genuinely frustrating.

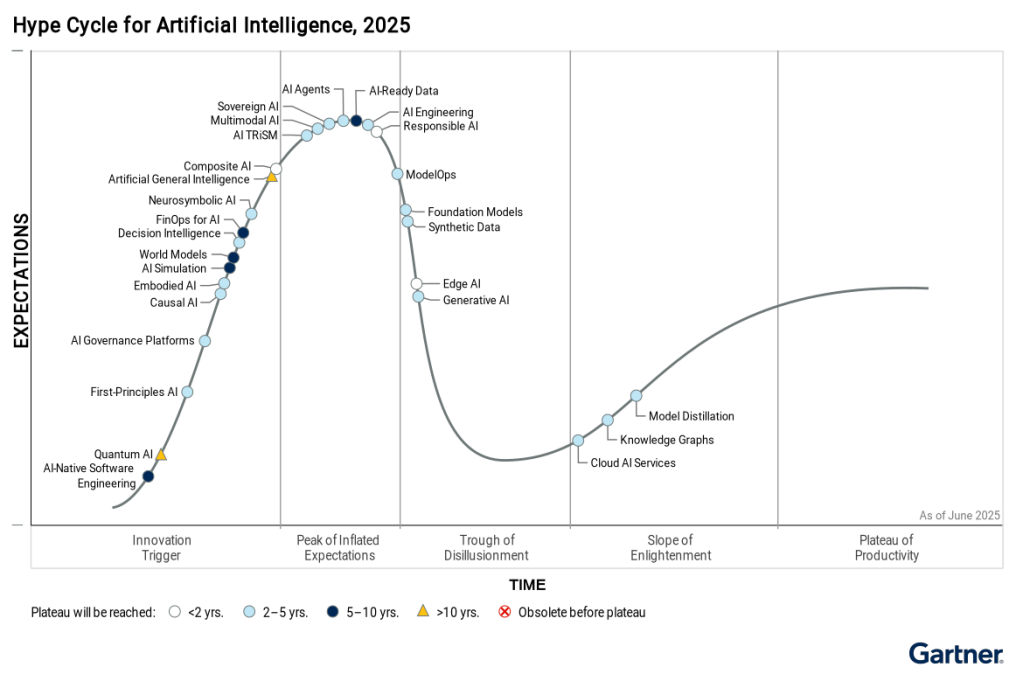

The honeymoon is definitely over. We're in the "trough of disillusionment" phase, where the initial hype has worn off, and we're dealing with the actual limitations. That's not a bad thing - it's a necessary phase of any technology adoption.

The trust deficit is the real story here. Until AI tools can be consistently accurate and transparent about their limitations, they'll remain assistants rather than replacements.

For junior developers betting heavily on AI, my advice is: Use it, but don't depend on it. Make sure you understand the fundamentals. Because when the AI gives you code that's "almost right but not quite," you need to know enough to fix it.

For companies building AI tools, the message is clear: Focus on accuracy, transparency, and trust. Speed and capabilities matter, but if developers don't trust your output, they won't use it for anything important.

References

- Stack Overflow 2025 Developer Survey - AI Section

- Survey Methodology: The Stack Overflow 2025 Developer Survey received 49,000+ responses from developers worldwide. All charts and data are from Stack Overflow and licensed under the Open Database License (ODbL).