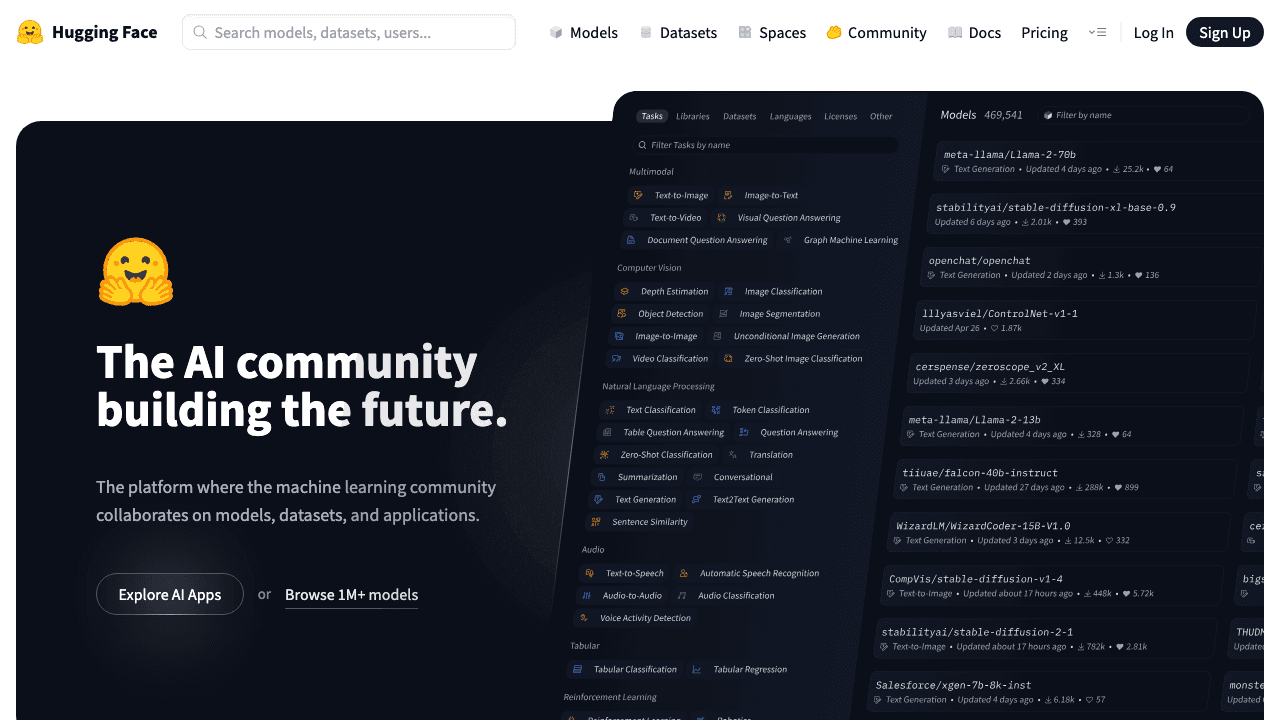

Hugging Face

The AI community and model hub

AI coding tool to accelerate development. See why developers choose this.

Engineered for the frontier of app development

Ampcode: AI coding agent for autonomous app development. Agentic code generation, review, image editing, and interactive walkthroughs in terminal and editors.

Privacy-focused AI code completion

AI coding tool to accelerate development. See why developers choose this.

Run open-source LLMs locally on your machine (Llama, Mistral, Gemma)

AI coding tool to accelerate development. See why developers choose this.

Launch your SaaS in days, not months

Next.js SaaS boilerplate with AI integration and auth. Authentication, Stripe payments, database included. Launch production SaaS startups 10x faster.

Build custom AI agents with no code

AI writing tool for better content. Join writers saving hours daily.

Buy and sell micro SaaS businesses

Productivity tool powered by AI. Work smarter, not harder.

Turn videos into 27 pieces of content instantly

AI video tool for content creators. Make videos 10x faster.

Collect and display customer testimonials with AI

Powerful AI tool to boost productivity. Compare & discover alternatives.

Create ultra-realistic AI voices and speech

Powerful AI tool to boost productivity. Compare & discover alternatives.

AI SEO Content Writer

AI writing tool for better content. Join writers saving hours daily.

Find your dream remote job without the hassle

Productivity tool powered by AI. Work smarter, not harder.

After months with Claude Code, I've found six strategies that work reliably for production code. Forget autonomous loops.

Super Bowl AI ads signal the bubble's end. Companies burning billions in losses are desperately trying to stave off the inevitable crash - just like 2000.

I tested Ampcode on production refactors for a month. It's faster than Claude Code for big changes, but requires careful review. Here's what I learned.

AI-powered meeting assistant for productivity and collaboration

AI-powered search, summaries, and automation for Slack

All-in-one AI platform for creating courses, communities, and branded websites

Create landing pages, funnels, and courses from one prompt with AI

Meeting minutes and task extraction

Detailed conversation insight summaries

Meta enhanced meeting assistant

Meeting analytics, emotion detection, and summaries

AI model that creates realistic and imaginative video from text

Create & Share Videos That Convert

AI Task Manager & Calendar Optimizer

Markerless motion capture powered by AI

Transform videos into immersive 3D environments

AI video generation for everyone

Professional AI voice and video presentation platform

AI video and voice generation platform

AI video repurposing for social media

Turn scripts into videos automatically

AI video generation for creative expression

AI video generator with talking avatars

Transform photos into AI art - Ghibli anime, sketches, and custom styles in seconds

Design Anything, Publish Anywhere

AI Image Editor

Design Tool

3D Editing Tool

AI-generated game assets in your art style

AI-powered creative suite for photo and video

Capture and create photorealistic 3D with AI