Google just made Gemini 3 Flash the default model across its entire ecosystem. It's now in the Gemini app, Google Search, and available to developers. The benchmark numbers look impressive. Companies like JetBrains and Cursor are already using it.

But here's the thing: I've been using Claude Code CLI for over a year, building production healthcare software. I don't switch tools because of benchmark scores. I switch when something meaningfully improves my actual workflow.

Let's cut through the marketing and figure out if Gemini 3 Flash actually matters.

What Google Claims

Gemini 3 Flash is Google's "frontier intelligence built for speed at a fraction of the cost." It's supposed to match Gemini 3 Pro performance while being 3x faster and costing 1/4 as much.

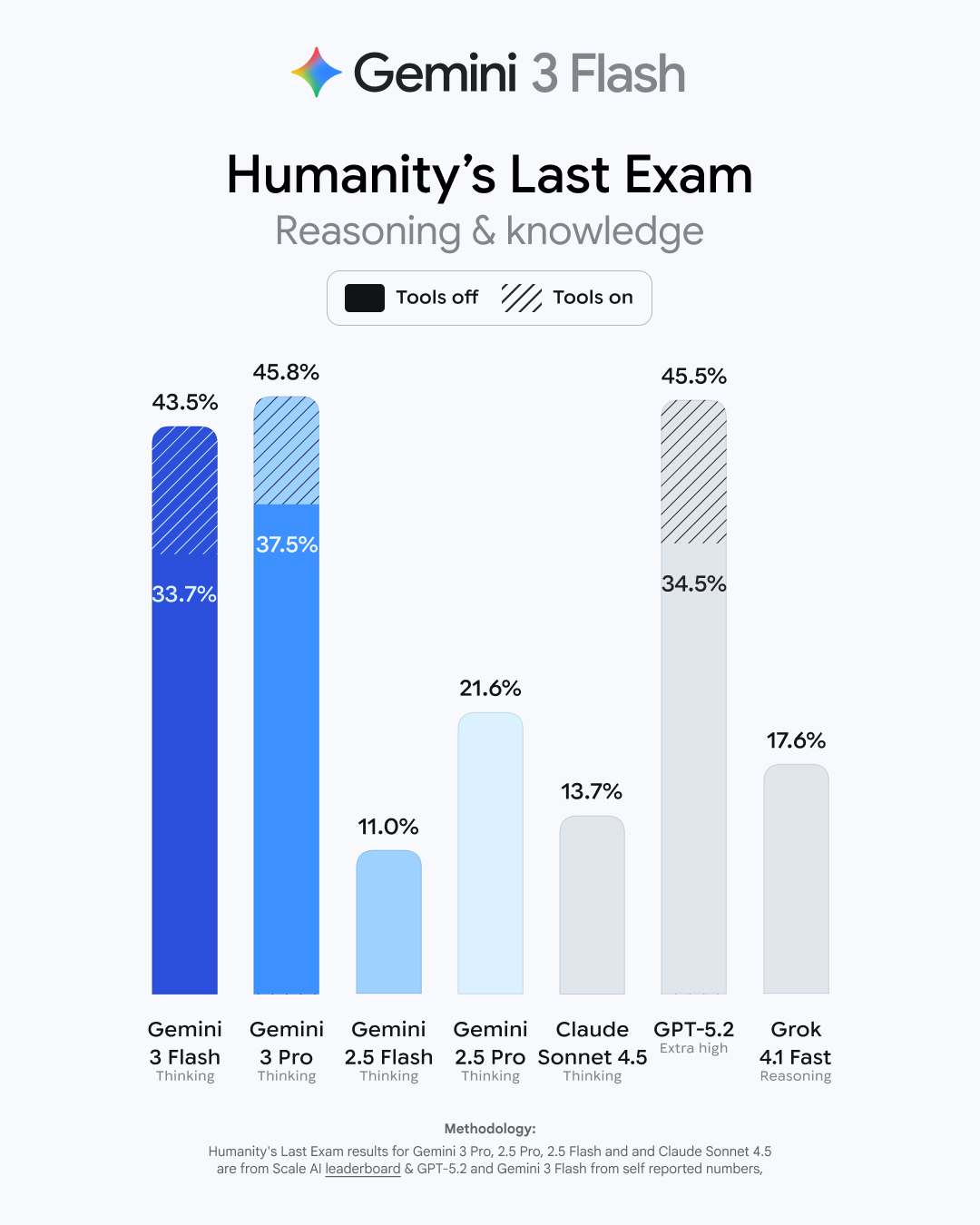

The benchmark numbers:

- 90.4% on GPQA Diamond (PhD-level scientific knowledge)

- 33.7% on Humanity's Last Exam (academic reasoning without tools)

- 81.2% on MMMU Pro (multimodal reasoning)

- Outperforms Gemini 2.5 Pro on many benchmarks

Pricing is $0.50 per 1M input tokens and $3.00 per 1M output tokens. That's more expensive than Gemini 2.5 Flash but cheaper than Pro.
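To make those rates concrete, here's a rough back-of-the-envelope sketch in Python. The two prices are the ones quoted above. The token volumes are hypothetical numbers picked for illustration, not measurements, and the function name is made up for this example. It only applies the two flat rates and ignores anything else that might affect a real bill.

```python
# Prices quoted above, in USD per 1M tokens.
INPUT_PRICE_PER_M = 0.50
OUTPUT_PRICE_PER_M = 3.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost for a given token volume at flat rates."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical heavy day of coding: 5M tokens in, 500k tokens out.
daily = estimate_cost(5_000_000, 500_000)
print(f"Estimated daily cost: ${daily:.2f}")
```

Swap in your own token counts to see where your usage lands. Output tokens cost six times as much as input tokens at these rates, so long generated responses dominate the bill faster than large prompts do.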

Google released this on December 17, 2025, and immediately made it the default everywhere. That's fast, even for Google.

The Real Context: Google vs OpenAI

This isn't happening in a vacuum. After Google released Gemini 3 Pro in November, OpenAI reportedly entered "code red" mode due to a drop in ChatGPT traffic. Then OpenAI rushed out GPT-5.2 (which I wrote about recently - it has an 8.4% hallucination rate and didn't impress me).

Now Google's back with Gemini 3 Flash, making it the default for billions of users overnight. This is the AI wars in real time.

What Actually Matters for Developers

Look, I don't care who wins the benchmark Olympics. I care about:

- Does it help me write better code faster?

- Is it reliable enough for production work?

- Does it integrate into my actual workflow?

- What does it cost when I'm using it all day?

For Gemini 3 Flash specifically:

The Good Stuff

Multi-file reasoning: Gemini has always been decent at understanding relationships across files. If Flash maintains that at 3x speed, that's actually useful.

Multimodal capabilities: Being able to paste screenshots of error messages or UI mockups and get relevant code is legitimately helpful. The 81.2% MMMU Pro score suggests this works well.

Cost at scale: For companies running AI across thousands of queries, going from Pro to Flash pricing while keeping performance is real money saved.

Speed: 3x faster than Gemini 2.5 Pro matters when you're iterating on code. Waiting 10 seconds instead of 30 on every request compounds over a day.

Available everywhere: Being the default in the Gemini app and Search means more people can try it without setup friction.
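To put rough numbers on the speed point above, here's a quick sketch. The 30-second and 10-second waits come from that example. The 100 requests per day is a hypothetical figure, and the function name is made up for illustration.

```python
def daily_wait_minutes(requests_per_day: int, seconds_per_request: float) -> float:
    """Total minutes spent waiting on responses in one day."""
    return requests_per_day * seconds_per_request / 60


REQUESTS_PER_DAY = 100  # hypothetical; adjust to your own usage

slow = daily_wait_minutes(REQUESTS_PER_DAY, 30)  # 30 s per response
fast = daily_wait_minutes(REQUESTS_PER_DAY, 10)  # 10 s per response
saved = slow - fast

print(f"Waiting at 30 s: {slow:.0f} min/day")
print(f"Waiting at 10 s: {fast:.0f} min/day")
print(f"Saved: {saved:.0f} min/day")
```

Under that assumption the difference is roughly half an hour a day, which is why response latency matters more than it looks on a single request.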

The Problems

It's still Gemini: I've tried Gemini before. The web interface is fine for quick questions, but it's not integrated into my terminal workflow like Claude Code CLI. I'm not copying and pasting code back and forth.

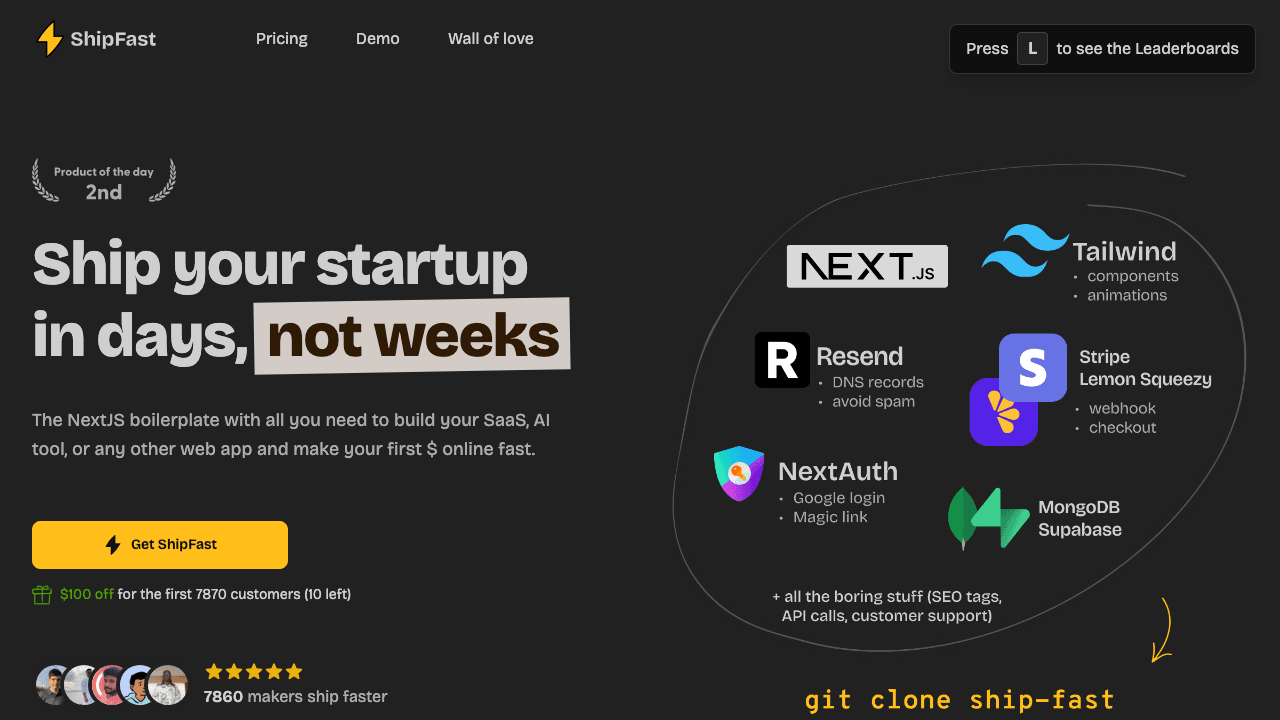

No comparable terminal tool: Google has "Gemini CLI," but it's not the same as Claude Code's codebase-aware, file-editing, git-integrated workflow. Until Google ships something that actually lives in my terminal and makes changes in my repo, this doesn't compete with my current setup.

Benchmark skepticism: The Humanity's Last Exam score of 33.7% sounds impressive until you realize that's still barely 1 in 3 questions correct. Benchmarks like these often don't translate to "writes production code that doesn't break."

The rush: Google made this the default immediately. That's either extreme confidence or competitive pressure. Given the OpenAI "code red" context, I'm betting on pressure. I prefer tools that have been battle-tested.

The Honest Take

If you're already using ChatGPT or Gemini, the upgrade to Gemini 3 Flash is worth it. It's faster, smarter, and now free for everyone using Gemini.

If you're a developer using Claude Code CLI like me, Gemini 3 Flash doesn't change the game. It's a better web interface, but I don't work in web interfaces. I work in terminals with actual code files.

If you're at a company using the Gemini API at scale, the cost savings are real. 3x faster at 1/4 the price adds up quickly.

Where Gemini 3 Flash Could Matter

There are specific scenarios where Gemini 3 Flash makes sense:

Research and quick questions: If you're using AI for "explain this concept" or "what's the best approach here," Gemini in Search or the app is actually convenient. It's right there when you search.

Multimodal work: If you're doing a lot of image analysis, video understanding, or working with PDFs, Gemini's strong multimodal capabilities are valuable.

Cost-sensitive scaling: If you're a startup running thousands of AI queries and watching costs, switching from Gemini Pro to Flash while keeping quality is a win.

Google ecosystem users: If you're already deep in Google Workspace, having AI integrated across Search, Docs, and Gmail is convenient.

What I'm Watching

I'm not switching from Claude Code CLI. But I'm curious about a few things:

- Will Google ship a proper terminal-integrated coding tool? If they do something like Claude Code that actually lives in my workflow, I'll try it.

- How do the hallucination rates compare? Benchmarks don't measure "how often does it confidently give me wrong code." That's what matters in production.

- Will companies actually use this? JetBrains, Cursor, and Figma are mentioned as early adopters. I want to see how it performs after 3-6 months of real usage.

- What's the catch? This level of performance at this price, made the default immediately: something doesn't add up. Either Google is taking a loss to compete with OpenAI, or the quality isn't as advertised.

Bottom Line

Gemini 3 Flash is a good upgrade if you're in the Google ecosystem. The benchmarks look solid, the speed increase is real, and the cost savings matter at scale.

But for developers who care about workflow integration more than benchmark scores, it's just another web interface. Until Google ships a terminal tool that actually competes with Claude Code CLI, Gemini Flash doesn't change my daily work.

The AI model wars are entertaining to watch, but they're not why I ship better code. I ship better code because I've found tools that fit how I actually work, not because the company behind them won this month's benchmark battle.

If Gemini 3 Flash fits your workflow, use it. If Claude Code fits your workflow, keep using that. If GPT-5.2 fits your workflow, you may want to reconsider, given its hallucination rate.

The best AI tool is still the one that gets out of your way and lets you write code.